For this entry, I set out to research current conversations about the developments and use cases of AI among Austrian authors, artists and journalists. Jörg Piringer uses AI in a way that I find fascinating, and I want to use his work as a source of inspiration in my own search for possible use cases. Drawn to poetry, Piringer experiments with AI in this art form, exploring its strengths and weaknesses and using both to his advantage. More on his experiments and views on the subject below.

Walter Gröbchen takes a surprisingly economic stance on the current state of AI, voicing concerns about the modern technology market, with its rapid growth and rising tensions between the big players.

Finally, I take a look at Moritz Rudolph’s ‘Der Weltgeist als Lachs’, in which he discusses a so-called spirit of the world: what defines it, where it spawned, where it has been heading and where it could end up, comparing it to the life cycle of the salmon, which returns to its place of birth at the end of its life. He places China and its rapid technological advances at the center of his attention. This is where the inevitability of AI in the context of Industry 4.0 ties into my research topic. I go into more detail below.

Jörg Piringer – Datenpoesie, Günstige Intelligenz

Born in 1974, the self-described musician, IT technician and author Jörg Piringer has been exploring the connections between technology and the arts, more specifically poetry, since the 1990s. In his 2018 book ‘Datenpoesie’, he experiments with a variety of algorithms, such as auto-completion, compression and translation algorithms, as well as the number-based letter system of Hebrew, to explore how machines understand, evaluate and interpret language and how we can use these technologies to transform language itself.

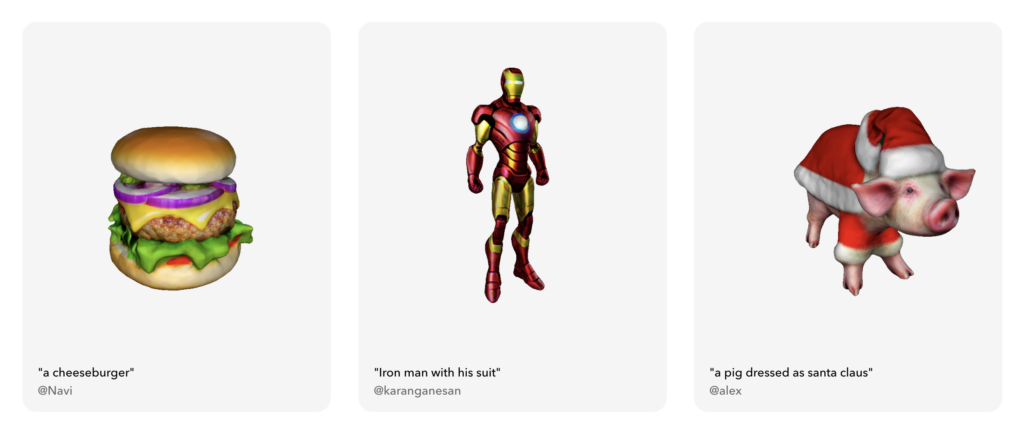

His 2022 book ‘Günstige Intelligenz’ makes use of recent developments in the field of AI, more specifically OpenAI’s GPT and ChatGPT. He feeds the model poetic inputs and prompts it to come up with original poetic ideas and even new words. In an interview with Günter Vallaster, questions of authorship, originality, humanity and emotionality in AI are raised. Piringer regards AI as a tool and sees no basis for attributing authorship to it, given its lack of emotions and of any understanding of our world, though he acknowledges its impressive linguistic and especially stylistic understanding. AI is very capable of reproducing human language; however, it often succumbs to clichés and stereotypes, rarely surprises with its creations, and its output can rarely be called literature at all. Piringer prefers to see this as a feature rather than an unintended shortcoming.

All in all, Piringer is uncertain about but unfazed by the future of AI, arguing that every technology inevitably becomes normalised. He further explains that the dystopian and utopian fantasies about AI’s role in possible future societies that he presents in the book are exaggerated and not to be taken at face value; rather, they serve to start conversations, which is ultimately his goal with the book.

Walter Gröbchen – Maschinenraum

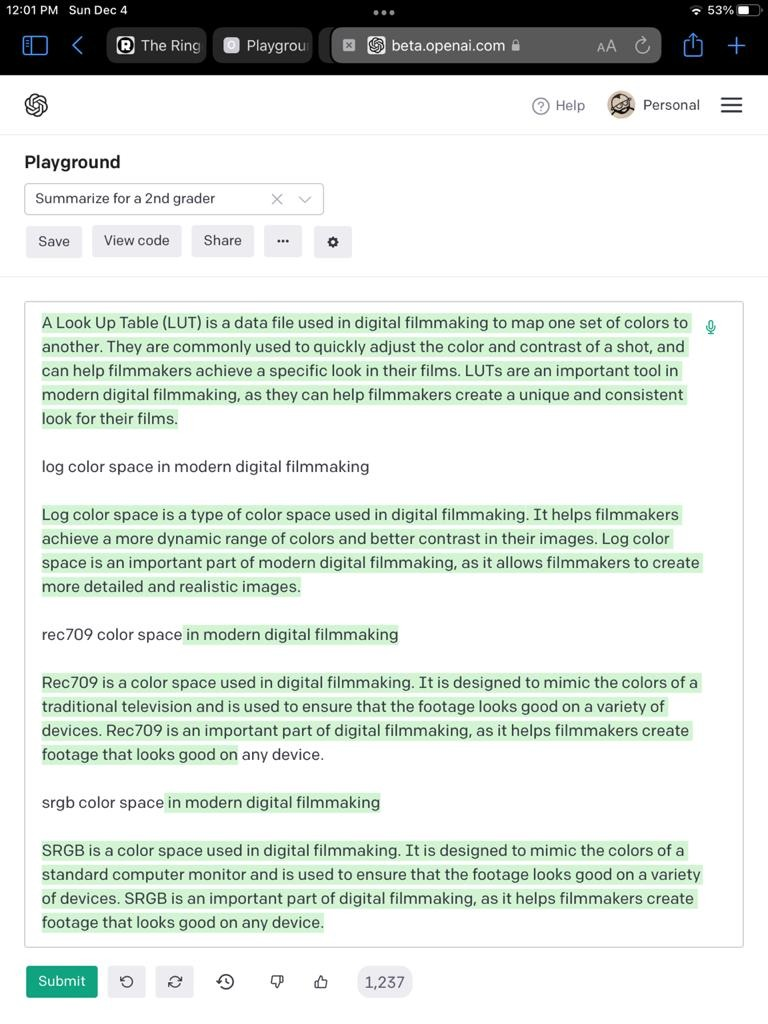

Journalist, author and record label owner Walter Gröbchen regularly publishes his thoughts and findings on technology and other topics in his column ‘Maschinenraum’ in the Wiener Zeitung. Writing about technological advances naturally brings up the topic of AI, which he discusses in his article ‘Die Simulation von Intelligenz’. In it, he describes the ever-growing interest in the pre-trained model GPT, with Microsoft, Elon Musk and Peter Thiel planning to invest billions of dollars in OpenAI, each with their own interests in mind.

He theorises that recent developments in AI, especially ChatGPT with its astounding resemblance to human communication, reignite a forgotten fantasy of many of the big players in the tech industry: the idea of human-like interaction with machines. Possible applications range from simple search engine optimisation to voice-activated assistants and smart home solutions. Every big player has its own versions of these services, so a competitive edge in the form of AI would be extremely valuable. This enormous economic value of AI is where Gröbchen’s criticism begins. In his article ‘Der Krieg hinter den Kulissen’, he discusses the semiconductor shortage that hit the tech industry hard in recent years. Even though the situation has been improving lately, the tech industry shows no sign of slowing down, so the emergence and rapid development of AI could be a cause for concern in this regard as well.

Moritz Rudolph – Der Weltgeist als Lachs

Born in 1989, Moritz Rudolph studied politics, history and philosophy, is currently writing his dissertation on international politics in relation to the older Critical Theory, and is the author of ‘Der Weltgeist als Lachs’. In the book, he discusses the so-called spirit of the world and how it relates to the salmon, explaining that the spirit of the world seems to return to its origins, not unlike the salmon returning to its place of birth to spawn or die. One has to be careful with this analogy, however, as the spirit of the world returns to its point of origin in a circular motion rather than along the salmon’s back-and-forth path.

The spirit of the world manifests itself in what makes us human: our creativity, our poetic nature, genuinely new ideas and our imperfections. Rudolph discusses Max Horkheimer’s pessimistic view of the direction in which society and human history have been heading, namely towards a strictly systematically organised, highly efficient world. Such a world would work so exceptionally well that it would generate a global equilibrium, eliminating the discrepancies between the First, Second and Third Worlds and any other negative differences. As Horkheimer described in 1966, this advanced society would also, in a sense, be devoid of the human spirit, as such an optimised system would leave no room for creative liberty, emotionality or imperfection. Rudolph recommends taking Horkheimer’s view of the world with a grain of salt, as it is a product of the Cold War and heavily influenced by the geopolitical situation of the Soviet Union at the time. Horkheimer does, however, refer to another power that is now a global superpower but was not at the center of attention during the Cold War: China.

This is where Rudolph’s analyses and thought experiments begin. Rudolph describes the rapid evolution of China’s Industry 4.0 and equates it with our modern definition of development and human advancement. China has been copying industrial practices from the West for the last 50 or so years and, in doing so, has become frighteningly fast at it. China’s leader Xi Jinping aims to develop China into a global superpower, a system that transcends national government. This is where Rudolph locates the spawning ground of the spirit of the world. Historically, and from a Eurocentric perspective, it is often said that human history started in the East and moved to the West, where it manifested itself in the land of opportunity, the USA. While the Western world was busy fighting out the Cold War, China quietly developed its industrial sector, infrastructure and governmental tactics to become the explosively expanding nation it is today. The spirit of the world seems to have made the leap across the Pacific Ocean and appears to want to return to its origin, the East.

This considerable speed of development in China’s Industry 4.0 naturally includes AI. China’s unique governmental structure has made it the current leading provider of what Rudolph calls the core resource of AI: human data. Furthermore, China has been filing two to three times as many AI patents as the second-largest player in AI, the USA. Rudolph calls this the first time in post-WWII history that a key technology is in the hands of someone other than the USA, and the first time in the history of capitalism that it is in the hands of someone outside the Western world.

Rudolph goes on to theorise about the possibility of AI overtaking China in the race to world leadership. One of the main criticisms of AI, which Piringer also encountered in his poetic experiments with GPT, is its lack of emotions, human imperfections and genuinely novel creative concepts, the very qualities that correspond to Rudolph’s understanding of the spirit of the world. Rudolph entirely dismisses this weakness as an obstacle to AI’s ability to lead, arguing that the world has seen countless leaders who showed little sign of morals, emotions or creativity. What AI excels at is systematic and schematic understanding, which he considers key leadership qualities. This hypothetical world ruled by AI could be seen as the structurally perfect world Horkheimer feared: a system devoid of imperfection, poetically originating from the birthplace of the world’s spirit, where it shall also find its death.

Source:

- Moritz Rudolph, Der Weltgeist als Lachs. Berlin: Matthes & Seitz Berlin 2021.

Further reading:

- Nick Bostrom, Superintelligenz. Szenarien einer kommenden Revolution. Translated from English by Jan-Erik Strasser. Berlin: Suhrkamp 2014.

- Ray Kurzweil, Menschheit 2.0. Die Singularität naht [2005]. Translated from English by Martin Rötzschke. Berlin: LoLa Books 2013.

- Marshall McLuhan, Die Gutenberg-Galaxis. Das Ende des Buchzeitalters [1962]. Bonn: Addison-Wesley 1995.

- Georg Wilhelm Friedrich Hegel, Vorlesungen über die Philosophie der Geschichte [1830/31]. Frankfurt: Suhrkamp 1970.