This blog post covers my first setup for experimenting with camera-based object tracking. I want to explore different tools and techniques to gain in-depth experience with real-time object tracking in 2D and 3D space.

For my examples I have chosen the following setup:

Hardware:

- Apple iPad Pro

- Laptop (Windows 10)

Software:

- ZigSim Pro (iOS only)

- vvvv (Windows only)

- OpenFrameworks

- Example: Tracking Marker in ZigSim

Because ZigSim uses the built-in iOS ARKit and calculates the coordinates within the app, we need to follow the documentation on the developer’s homepage.

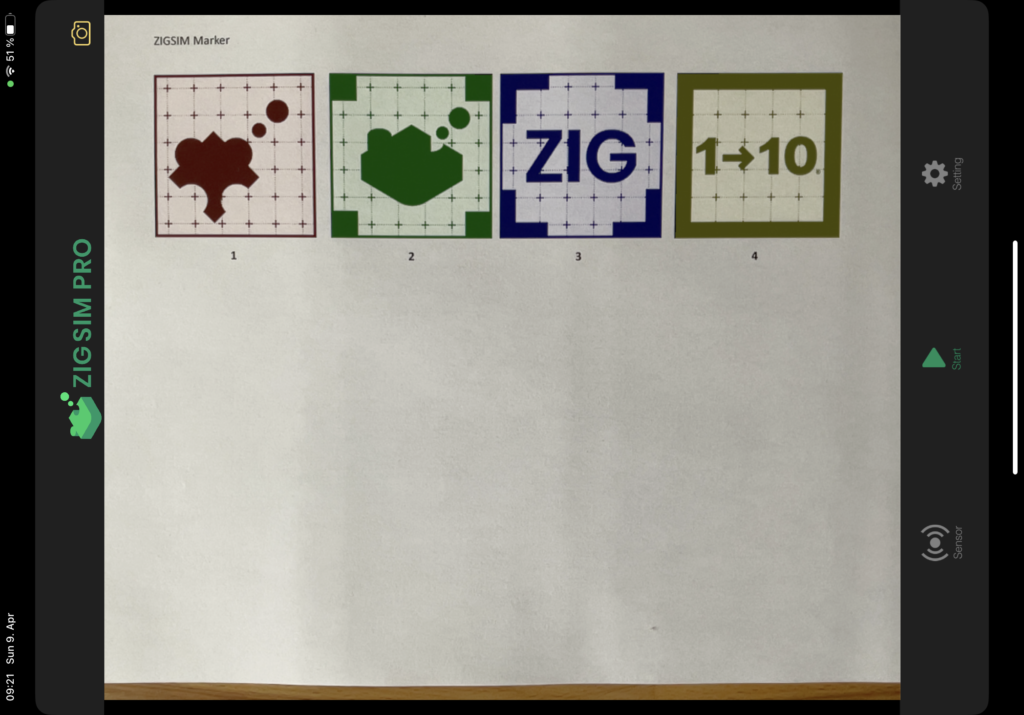

ARKit in ZigSim has four modes (DEVICE, FACE, MARKER and BODY). For now we are only interested in MARKER tracking, which can track up to four markers.

The markers can be downloaded here: https://1-10.github.io/zigsim/zigsim-markers.zip

OSC Address:

- Position: /(deviceUUID)/imageposition(MARKER_ID)

- Rotation: /(deviceUUID)/imagerotation(MARKER_ID)
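On the receiving side, the marker ID has to be recovered from the incoming address. The following is a minimal sketch of that step, not ZigSim's or any library's own API: the helper name `markerIdFromPositionAddress` is hypothetical, and it assumes only that the last path segment has the form `imageposition(MARKER_ID)` as listed above.

```cpp
#include <cstddef>
#include <string>

// Hypothetical helper: returns the marker ID encoded in an OSC address whose
// last path segment is "imageposition<ID>", or -1 if the address does not match.
int markerIdFromPositionAddress(const std::string& address) {
    const std::string key = "imageposition";
    const std::size_t slash = address.rfind('/');
    if (slash == std::string::npos) return -1;

    const std::string segment = address.substr(slash + 1);
    // Require at least one digit after the key, and at most three.
    if (segment.size() <= key.size() || segment.size() > key.size() + 3) return -1;
    if (segment.compare(0, key.size(), key) != 0) return -1;

    int id = 0;
    for (std::size_t i = key.size(); i < segment.size(); ++i) {
        const char c = segment[i];
        if (c < '0' || c > '9') return -1;  // anything but a digit is rejected
        id = id * 10 + (c - '0');
    }
    return id;
}
```

Matching only the last segment keeps the helper independent of the device-specific part of the address.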

- Example: “Hello World”

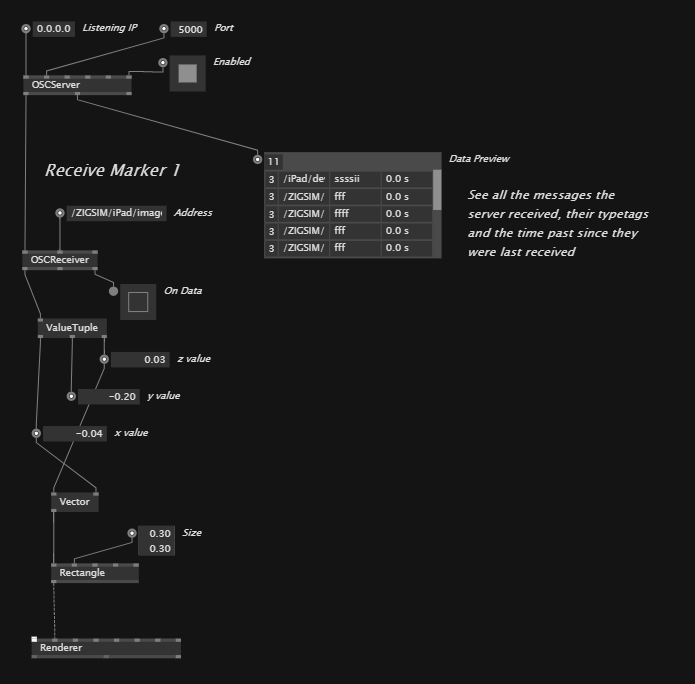

In this example we want to establish a connection via the OSC protocol between ZigSim (the OSC sender) and a laptop running vvvv (the OSC receiver), a visual live-programming environment.
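To make the protocol a little less of a black box, here is a rough sketch of how a single OSC 1.0 message with float arguments is laid out on the wire: a null-terminated address string padded to a multiple of four bytes, a type tag string such as `,fff` padded the same way, and then each float as four big-endian bytes. The names `OscFloatMessage`, `readPaddedString` and `decodeOscFloats` are made up for this illustration. Whether ZigSim sends plain messages or bundles is not something I have verified, and in practice vvvv and ofxOsc do this decoding for you.

```cpp
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <string>
#include <vector>

// Result of decoding one OSC message whose arguments are all 32-bit floats.
struct OscFloatMessage {
    std::string address;
    std::vector<float> values;
    bool valid = false;
};

// Reads a null-terminated OSC string at `pos`, then advances `pos` past the
// terminator and padding to the next 4-byte boundary.
bool readPaddedString(const std::vector<std::uint8_t>& data, std::size_t& pos,
                      std::string& out) {
    std::size_t end = pos;
    while (end < data.size() && data[end] != 0) ++end;
    if (end >= data.size()) return false;  // missing terminator
    out.assign(reinterpret_cast<const char*>(data.data()) + pos, end - pos);
    pos = (end + 4) & ~static_cast<std::size_t>(3);
    return true;
}

// Decodes a single (non-bundle) OSC message that carries only 'f' arguments.
// Assumes `float` is a 32-bit IEEE 754 value, as on common desktop platforms.
OscFloatMessage decodeOscFloats(const std::vector<std::uint8_t>& data) {
    OscFloatMessage msg;
    std::size_t pos = 0;
    std::string tags;
    if (!readPaddedString(data, pos, msg.address)) return msg;
    if (!readPaddedString(data, pos, tags)) return msg;
    if (tags.empty() || tags[0] != ',') return msg;

    for (std::size_t i = 1; i < tags.size(); ++i) {
        if (tags[i] != 'f' || pos + 4 > data.size()) return msg;
        // Assemble the big-endian bytes into a host-order 32-bit pattern.
        const std::uint32_t bits =
            (static_cast<std::uint32_t>(data[pos]) << 24) |
            (static_cast<std::uint32_t>(data[pos + 1]) << 16) |
            (static_cast<std::uint32_t>(data[pos + 2]) << 8) |
            static_cast<std::uint32_t>(data[pos + 3]);
        float value = 0.0f;
        std::memcpy(&value, &bits, sizeof value);
        msg.values.push_back(value);
        pos += 4;
    }
    msg.valid = true;
    return msg;
}
```

If decoding stops early, `valid` stays `false`, so a caller should check it before using `values`.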

Here you can see the vvvv patch, which is a modification of the built-in OSC example:

- Tracking the marker

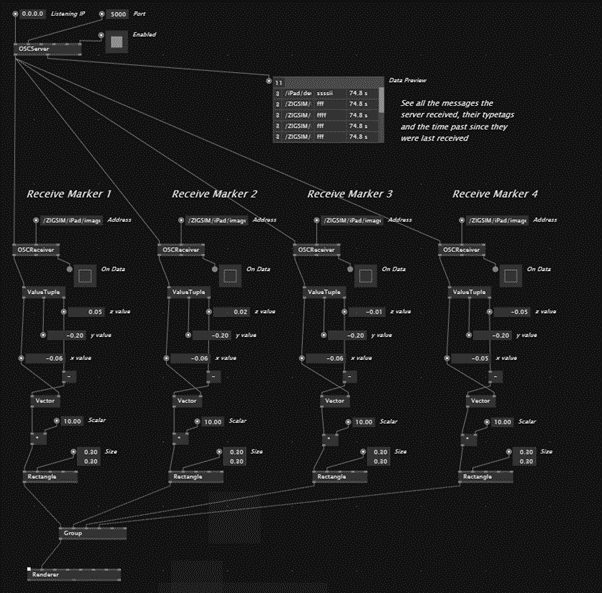

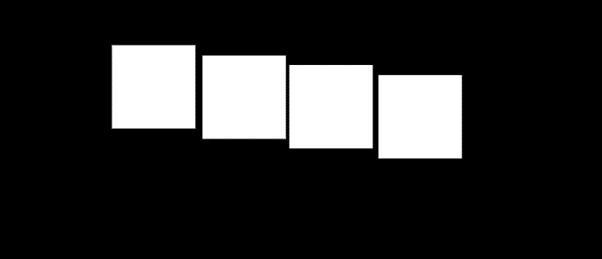

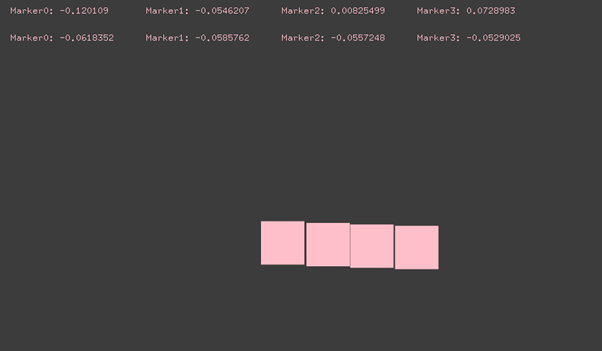

In the next step we track the location of four markers via ZigSim, transfer the coordinates to vvvv and draw a rectangle for each marker.

- Example: OpenFrameworks

In the last step we compare the workflow of the node-based environment vvvv with that of OpenFrameworks, which is built on top of C++ and is programmed in Visual Studio or Xcode.

```cpp
void ofApp::update(){
    // Drain every OSC message that arrived since the last frame.
    while (osc.hasWaitingMessages()) {
        ofxOscMessage m;
        osc.getNextMessage(&m);

        // For each marker, argument 2 drives x (scaled by -2)
        // and argument 0 drives y (scaled by 2).
        if (m.getAddress() == "/ZIGSIM/iPad/imageposition0") {
            osc_0x = m.getArgAsFloat(2) * (-2);
            osc_0y = m.getArgAsFloat(0) * (2);
        }
        if (m.getAddress() == "/ZIGSIM/iPad/imageposition1") {
            osc_1x = m.getArgAsFloat(2) * (-2);
            osc_1y = m.getArgAsFloat(0) * (2);
        }
        if (m.getAddress() == "/ZIGSIM/iPad/imageposition2") {
            osc_2x = m.getArgAsFloat(2) * (-2);
            osc_2y = m.getArgAsFloat(0) * (2);
        }
        if (m.getAddress() == "/ZIGSIM/iPad/imageposition3") {
            osc_3x = m.getArgAsFloat(2) * (-2);
            osc_3y = m.getArgAsFloat(0) * (2);
        }
    }
}
```
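The four near-identical branches above could be folded into a single lookup. Below is a sketch of that idea in plain C++, independent of OpenFrameworks so it can be tried on its own. `MarkerPosition`, `kMarkerCount` and `applyMarkerPosition` are hypothetical names. The address prefix and the axis mapping (argument 2 times -2 for x, argument 0 times 2 for y) are taken from the code above.

```cpp
#include <array>
#include <cstddef>
#include <string>
#include <vector>

// One tracked marker in the coordinate convention used by the update() code.
struct MarkerPosition {
    float x = 0.0f;
    float y = 0.0f;
};

constexpr std::size_t kMarkerCount = 4;

// Stores the position for the marker named by `address` ("<prefix><digit>").
// Returns true if the address matched and the marker was updated.
bool applyMarkerPosition(const std::string& address,
                         const std::vector<float>& args,
                         std::array<MarkerPosition, kMarkerCount>& markers,
                         const std::string& prefix = "/ZIGSIM/iPad/imageposition") {
    if (args.size() < 3) return false;                       // needs arguments 0 and 2
    if (address.size() != prefix.size() + 1) return false;   // exactly one ID digit
    if (address.compare(0, prefix.size(), prefix) != 0) return false;

    const char digit = address.back();
    if (digit < '0' || digit >= static_cast<char>('0' + kMarkerCount)) return false;

    MarkerPosition& marker = markers[static_cast<std::size_t>(digit - '0')];
    marker.x = args[2] * -2.0f;  // same mapping as osc_Nx above
    marker.y = args[0] * 2.0f;   // same mapping as osc_Ny above
    return true;
}
```

Inside `ofApp::update()` the arguments could be copied out of the `ofxOscMessage` with `getNumArgs()` and `getArgAsFloat(i)` before calling the helper. Adding a fifth marker would then only mean changing `kMarkerCount`.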

Note: Both tools (vvvv and OpenFrameworks) have their own advantages and disadvantages, and I am curious to explore both approaches to find the best workflow.