For this entry, I wanted to finally sit down and take a look at Adobe Photoshop’s own text-to-image AI tool, generative fill. The feature was introduced with Photoshop (Beta) 24.7.0 and is powered by Adobe Firefly, a group of generative models trained on Adobe’s own stock images. It lets the user remove, change or add content in an image from within Photoshop by means of text prompts. The tool currently supports only English prompts and is limited to the beta version of Photoshop.

Basic functionality

For starters, I wanted to have a little innocent fun with the tool’s primary intended use, which seems to be photo manipulation. For this, I naturally needed to use a picture of my cat. First, I turned the portrait picture into a landscape version. I left the text prompt empty for this step, and generative fill gave me three versions of the generated content to choose from:

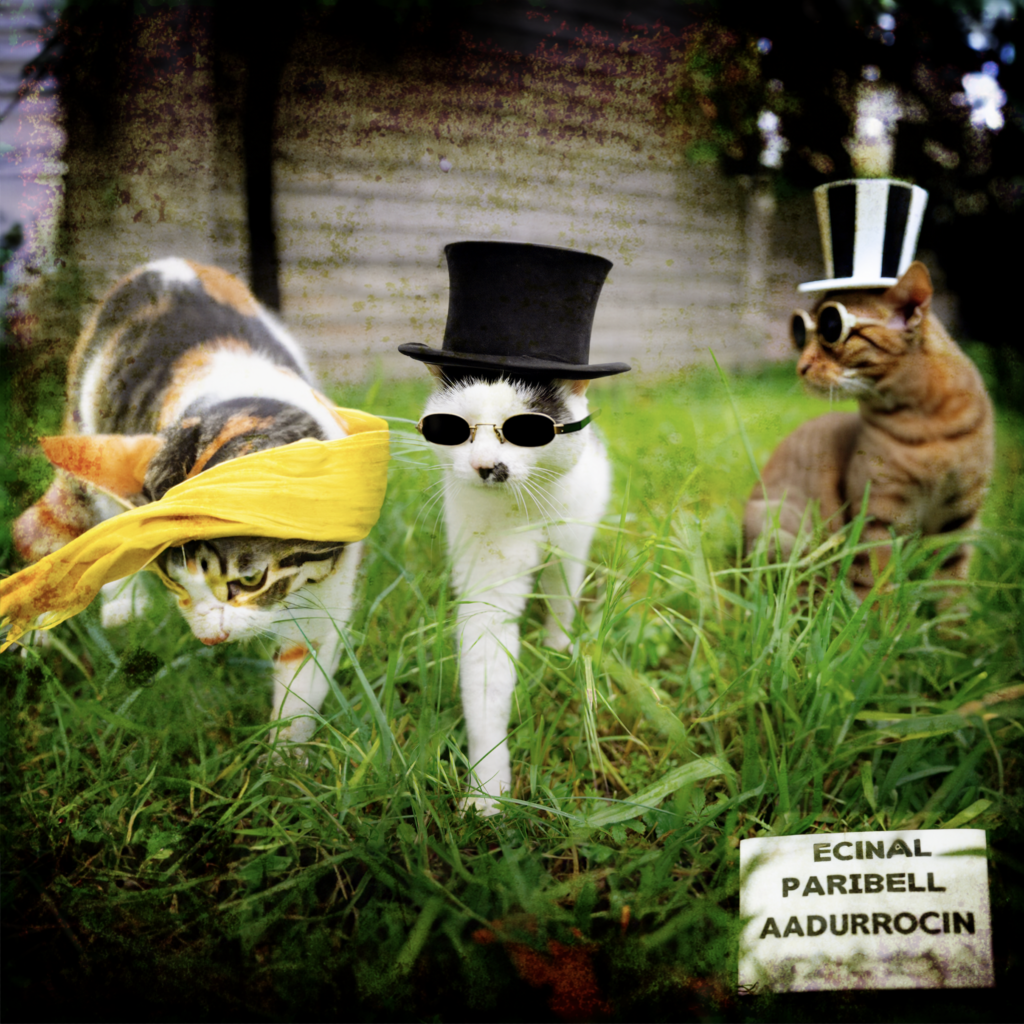

Next, I gave her some accessories for the summer and some friends. The AI generated convincing fellow felines but struggled to give the left cat glasses, so I changed the prompt to a scarf, which worked more or less. I assume that the quality of the results drops quickly when the AI has to build on content it generated itself. This is also when I noticed that files with generative layers get large fast: this particular file takes up just under 5 GB of storage at a resolution of 3000 x 3000 pixels. Generation speed, on the other hand, is excellent: the first step of filling out the original photograph to an astounding 11004 x 7403 pixels took a matter of seconds.
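To put those numbers in perspective, here is a rough back-of-the-envelope sketch in Python. It assumes uncompressed 8-bit RGBA pixels (4 bytes per pixel) and treats "just under 5 GB" as 5 x 10^9 bytes. Both are assumptions made purely for illustration. The sketch says nothing about how Photoshop actually stores generative layers; it only shows the scale involved.

```python
# Back-of-the-envelope size arithmetic for the figures quoted above.
# Assumption: uncompressed 8-bit RGBA, i.e. 4 bytes per pixel.
BYTES_PER_PIXEL = 4


def megapixels(width: int, height: int) -> float:
    """Pixel count of an image, in millions of pixels."""
    return width * height / 1_000_000


def raw_layer_bytes(width: int, height: int) -> int:
    """Size of a single uncompressed layer under the assumption above."""
    return width * height * BYTES_PER_PIXEL


# Expanded landscape version of the photograph.
expanded_mp = megapixels(11004, 7403)

# One flat layer of the 3000 x 3000 file.
square_layer = raw_layer_bytes(3000, 3000)

# "Just under 5 GB", taken here as an upper bound of 5 * 10^9 bytes.
file_size = 5 * 10**9
equivalent_layers = file_size // square_layer

print(f"Expanded image: {expanded_mp:.1f} megapixels")
print(f"One raw 3000 x 3000 layer: {square_layer / 1_000_000:.0f} MB")
print(f"File size equals roughly {equivalent_layers} such layers")
```

Under these assumptions, the file is more than a hundred times larger than a single flat layer at that resolution, which suggests that much more than the visible pixels is being stored.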

After expanding the image even further to end up with a square, I wanted to test the AI’s generative capabilities with a somewhat abstract prompt: I told it to generate some grunge for me:

To my surprise, the results were not only promising but, at 3000 x 3000 pixels, usable as well. After a quick kitbash to create a fictional album cover for the artists below, I moved on to examine another possible use case.

Image to Image

The tool seems to work best with real photographs, likely due to the nature of the data that Adobe Firefly is trained on, but I would need to do more research on that to make a valid claim. For this next test, I wanted to see whether generative fill would be able to take a crude drawing as an input and turn it into a useful result.

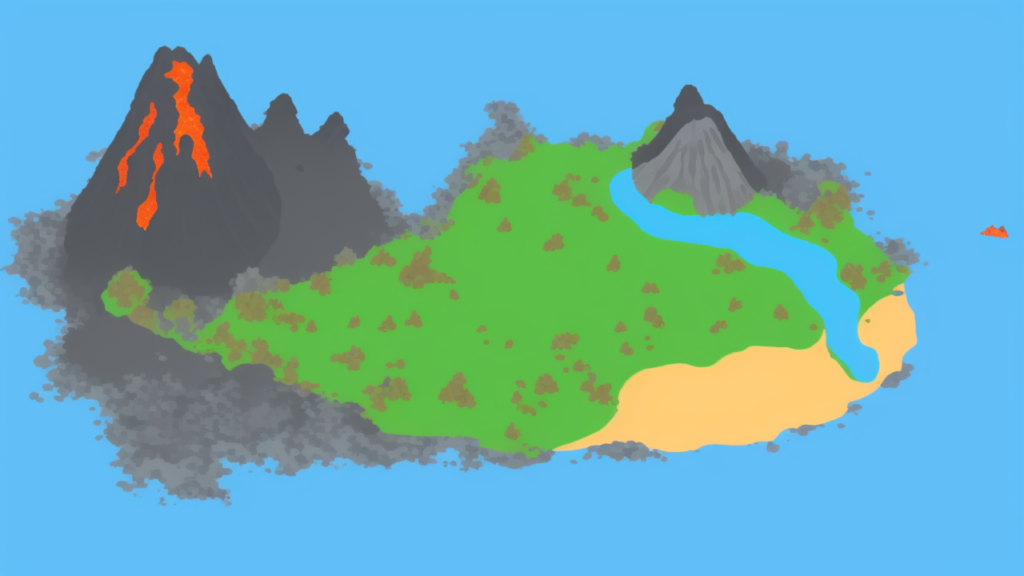

For this, I expertly drew the schematics of an island meant to represent a video game overworld, with rough approximations of a volcano, a beach and a castle. I then selected the entire canvas and prompted the AI to generate a stylised island with a castle and a volcano on it.

The results, while technically impressive, don’t take the original drawing into account very well. The AI doesn’t seem to understand the implied perspective or shapes of the crude source image.

For this result, I specifically selected the volcano and the castle and told the AI to generate those features respectively. Interestingly, the AI was able to replicate my unique art style quite well but failed to produce any novel styles or results.

For comparison, this result was achieved in Stable Diffusion using very basic prompts similar to the one used in Photoshop. Stable Diffusion seems to be much more competent at working with crude schematics provided by the user.

Conclusion

I am deeply impressed with the capabilities of this new feature built directly into Photoshop. I expect that image restoration, retouching and other forms of photo and image editing and manipulation will experience a revolution following the release of this tool. It does come with its limitations, of course, so it will most likely not be a solution for all generative AI use cases. This development does, however, have me worried about whether I can achieve something meaningful with my thesis. It seems that the most powerful companies in the world are spending exorbitant amounts of money to develop tools specifically designed to speed up design processes. Nevertheless, I am eager to find out how the field develops in the near future, and I, for one, will definitely adopt this feature into my workflow wherever possible.