As mentioned in Blog #1, digital accessibility rests on three pillars:

- Content management (10%)

- Design (10%)

- Development (80%)

Because development makes up the largest share, I am dedicating a separate post to it.

Content management

Understandability

An important principle of digital accessibility is "understandability": digital content should be worded so that all users can easily understand it, regardless of their abilities or limitations. Abbreviations should be avoided or at least clearly explained. Avoid foreign words and self-coined terms, and do not write words in all capital letters. This allows screen readers to interpret the grammar correctly and read the text aloud fluently.

Plain language / Easy English

The KISS formula: "keep it simple and stupid". Easy-to-read texts in content management and editorial work are an essential prerequisite for digital accessibility. They include people with cognitive impairments and/or limited language skills. Clear and simple language improves communication for all readers and reduces misunderstandings.

Further information: https://centreforinclusivedesign.org.au/wp-content/uploads/2020/04/Easy-English-vs-Plain-English_accessible.pdf

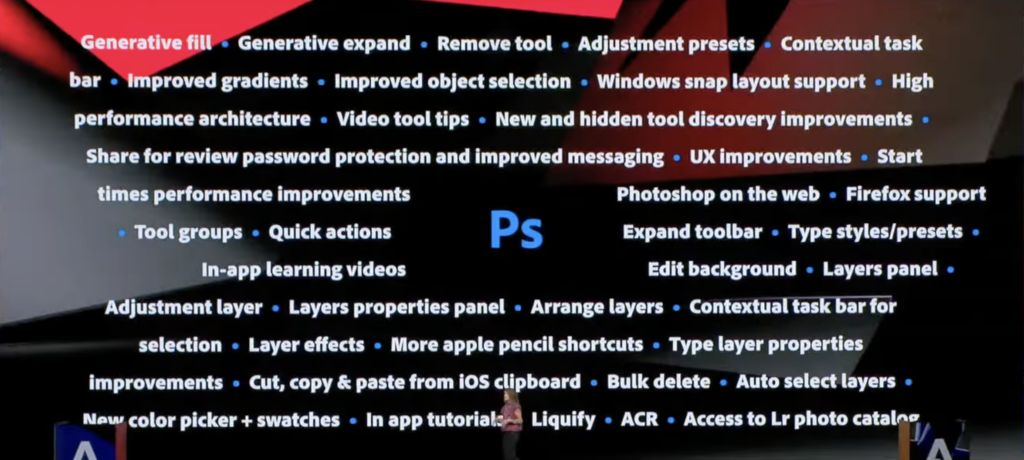

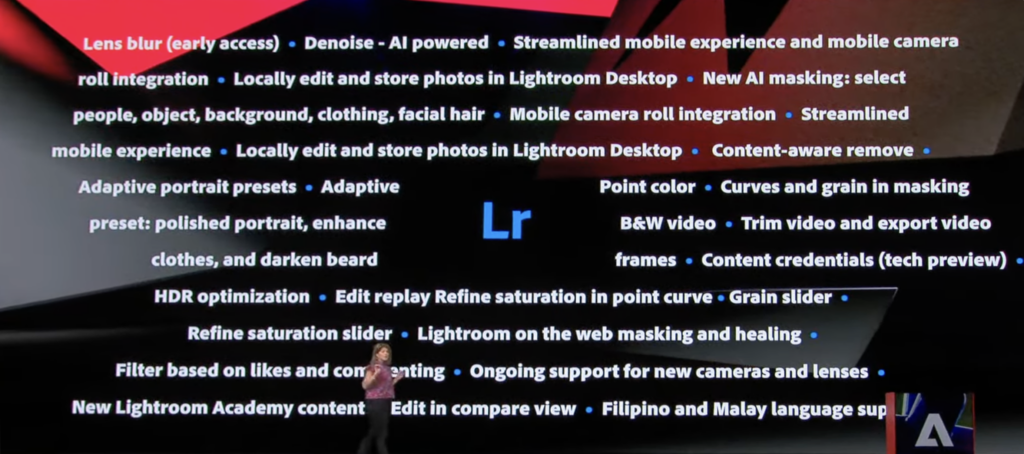

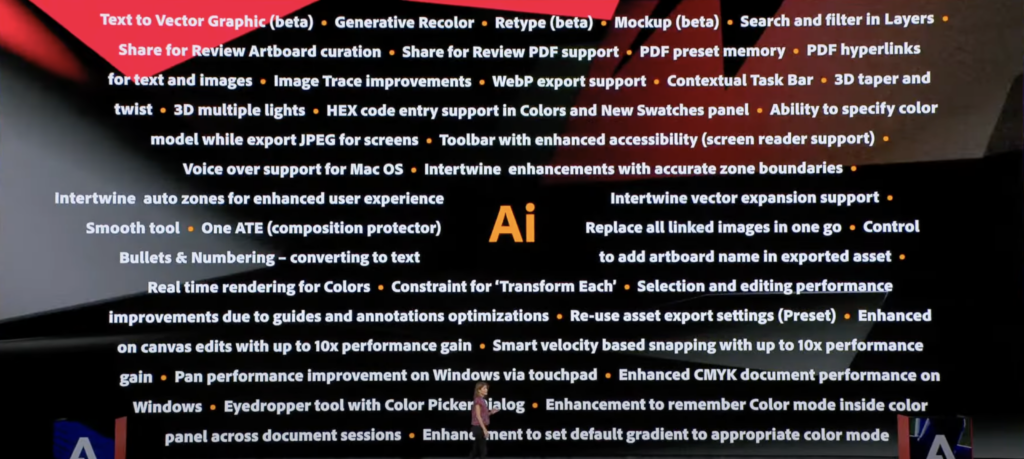

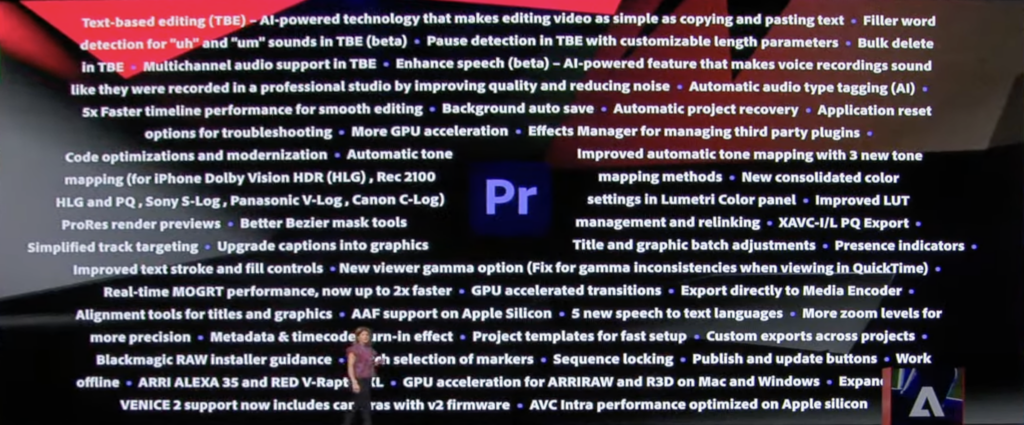

Alternative texts

These are short descriptions inserted into the HTML code of images, graphics, videos, and other non-text elements of a web page. The purpose of alt texts is to let screen readers read out a description of an image, so that visual elements become perceivable for people with visual impairments or other limitations.
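To make the idea concrete, here is a minimal sketch of how an editor could scan a page for images that have no alt text at all. It uses only Python's standard `html.parser` module; the class and function names are made up for this example, and an empty `alt=""` is deliberately not reported, on the assumption that it marks a purely decorative image.

```python
from html.parser import HTMLParser


class MissingAltFinder(HTMLParser):
    """Collects the src of every <img> tag that has no alt attribute."""

    def __init__(self):
        super().__init__()
        self.missing = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attributes = dict(attrs)
        # alt="" is treated as intentional (decorative image), so only a
        # completely absent alt attribute is reported.
        if "alt" not in attributes:
            self.missing.append(attributes.get("src", "(no src)"))


def find_images_without_alt(html):
    """Return the src values of all images that lack an alt attribute."""
    finder = MissingAltFinder()
    finder.feed(html)
    finder.close()
    return finder.missing


page = '<img src="logo.png" alt="Company logo"><img src="chart.png">'
print(find_images_without_alt(page))  # ['chart.png']
```

A check like this only finds missing alt texts. Whether an existing description is actually helpful still has to be judged by a person.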

Structure

Use a consistent heading hierarchy, subheadings, paragraphs, and lists, and avoid justified text.

These measures also help with SEO.
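The heading hierarchy can be checked roughly by machine. The following sketch, again based on Python's standard `html.parser`, reports every place where a heading level is skipped, for example an h2 followed directly by an h4. The names are hypothetical, and the rule that a page should start with h1 is an assumption of this example.

```python
import re
from html.parser import HTMLParser


class HeadingCollector(HTMLParser):
    """Records the level (1-6) of each heading in document order."""

    def __init__(self):
        super().__init__()
        self.levels = []

    def handle_starttag(self, tag, attrs):
        if re.fullmatch(r"h[1-6]", tag):
            self.levels.append(int(tag[1]))


def skipped_heading_levels(html):
    """Return (previous_level, level) pairs where a level was skipped.

    The previous level starts at 0, so a page whose first heading is
    not an h1 is reported as well.
    """
    collector = HeadingCollector()
    collector.feed(html)
    collector.close()

    problems = []
    previous = 0
    for level in collector.levels:
        # Going deeper by more than one level skips a heading level;
        # going back up by any amount is fine.
        if level > previous + 1:
            problems.append((previous, level))
        previous = level
    return problems


page = "<h1>Title</h1><h2>Section</h2><h4>Detail</h4>"
print(skipped_heading_levels(page))  # [(2, 4)]
```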

Design

The design requirements also apply to subtitles displayed in videos.

Contrast

At WCAG level AA, the color contrast must be at least 4.5:1 for normal text and 3:1 for large text. At level AAA, it must be at least 7:1 for normal text and 4.5:1 for large text. The higher the contrast, the more people with visual impairments can make out the color design.
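For a manual check, the contrast ratio of two colors can be calculated directly. The sketch below follows the relative-luminance and contrast-ratio formulas from the WCAG 2.x definitions; the function names are chosen for this example, colors are assumed to be given as `#rrggbb`, and the required ratio is passed in by the caller rather than hard-coded.

```python
def _linearize(channel):
    """Convert one 8-bit sRGB channel (0-255) to its linear-light value."""
    c = channel / 255
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(hex_color):
    """Relative luminance of a color given as '#rrggbb'."""
    value = hex_color.lstrip("#")
    r, g, b = (int(value[i:i + 2], 16) for i in (0, 2, 4))
    return 0.2126 * _linearize(r) + 0.7152 * _linearize(g) + 0.0722 * _linearize(b)


def contrast_ratio(foreground, background):
    """Contrast ratio of two colors; the order of the arguments does not matter."""
    lighter, darker = sorted(
        (relative_luminance(foreground), relative_luminance(background)),
        reverse=True,
    )
    return (lighter + 0.05) / (darker + 0.05)


def meets_threshold(foreground, background, required_ratio):
    """True if the two colors reach at least the required contrast ratio."""
    return contrast_ratio(foreground, background) >= required_ratio


print(round(contrast_ratio("#000000", "#ffffff"), 2))  # 21.0
print(meets_threshold("#ffffff", "#ffffff", 3))        # False
```

Black on white gives the maximum possible ratio of 21:1, and two identical colors give the minimum of 1:1.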

For a quick check there is software such as WAVE (Web Accessibility Evaluation Tool, https://wave.webaim.org/) as well as browser add-ons and extensions such as various "contrast checkers". However, these tools are only about 20% to 40% accurate, so their results always have to be verified manually afterwards.

The Blinden- und Sehbehindertenverband Österreich (https://www.blindenverband.at), the Austrian association of blind and visually impaired people, demonstrates the use of color contrasts on its own website. It also takes into account people with color blindness or color vision deficiencies such as red-green deficiency: in the menu at the top, they can choose between different color contrast schemes and adapt the site to their needs.

Red-green color vision deficiency affects 9% of men and 0.4% of women. (Source: https://www.aok-bv.de/presse/medienservice/ratgeber/index_25206.html; accessed 2 November 2023)

Paragraphs, typefaces, and type hierarchy

- Lines of text should be no shorter than 60 and no longer than 80 characters. The line height is ideally 1.5 times the font size.

- Left-aligned text, no justified text.

- Sans-serif typefaces are preferred on screens. Depending on the display resolution, serifs may not be rendered accurately.

- Avoid words set in majuscules (capital letters) or small caps. Screen readers cannot interpret the grammar correctly and misread words, or the flow of the read-aloud text is disrupted.

- Depending on the font size, condensed typefaces are unsuitable. Characters set too tightly impede the reading flow for people with visual impairments.

- The minimum font size on digital devices is 16 pixels (px), for both desktop and mobile views. This can vary with the typeface; choose one with precisely drawn characters and well-balanced letter spacing.
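The rule about words in capital letters can be checked in an editorial text before publishing. The following is a minimal sketch with a hypothetical function name; the minimum length of four letters is an assumption of this example, chosen so that short acronyms such as "FAQ" are not flagged.

```python
import re


def find_all_caps_words(text, min_length=4):
    """Return words of at least min_length letters written entirely in capitals."""
    # [^\W\d_] matches any Unicode letter, so umlauts are covered as well.
    pattern = re.compile(r"\b[^\W\d_]{%d,}\b" % min_length)
    return [word for word in pattern.findall(text) if word.isupper()]


print(find_all_caps_words("PLEASE read the FAQ before posting"))  # ['PLEASE']
```

The result is only a list of candidates. Longer acronyms are flagged too, so an editor still decides case by case.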