In this blog post we look at a second feature of ZigSim: it can transmit the video and audio captured by the device using NDI™, a video-over-IP protocol. The stream can be received with any NDI client app; in our case we use vvvv and OpenFrameworks to get familiar with the corresponding workflows.

Setup:

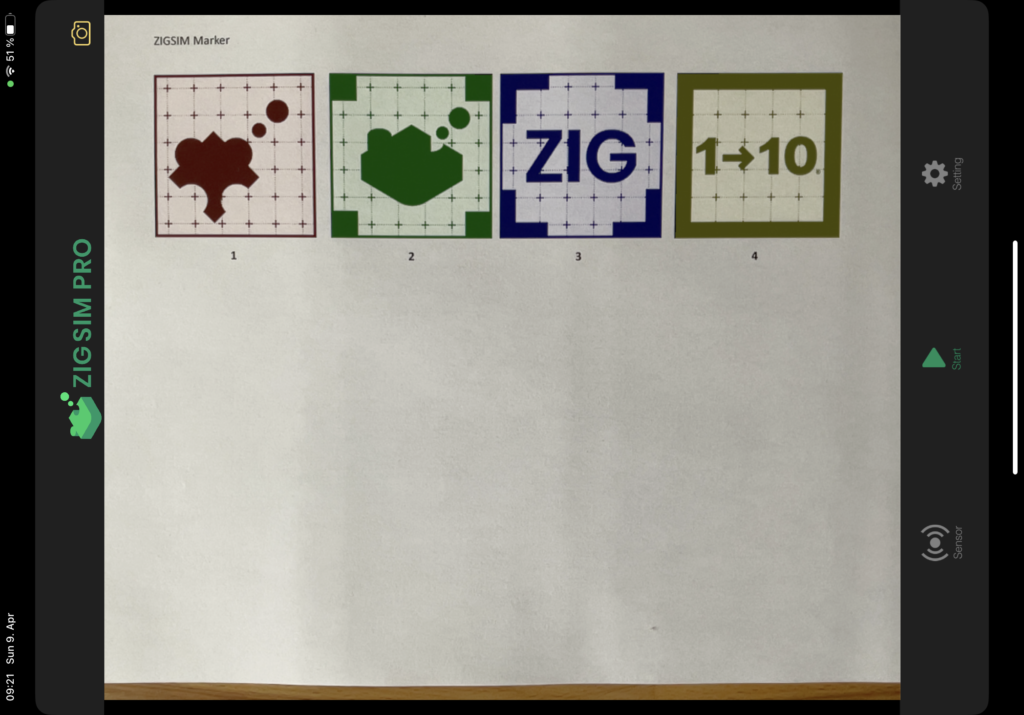

The goal is to set up a connection between our sender (iPad Pro) and receiver (laptop), which gives us a second way to track physical objects over a local network.

First, we need to install the NDI® Tools, which can be found here:

The tools include several applications (such as Test Patterns and Screen Capture) for creating NDI sources on the computer.

For our first test we run the Studio Monitor app and select the broadcast from the ZigSim iOS app.

Note: While debugging, I found that ZigSim does not always connect to the computer successfully, and it does not raise an error when this happens. So if your device does not show up, force-close the ZigSim app and open it again.

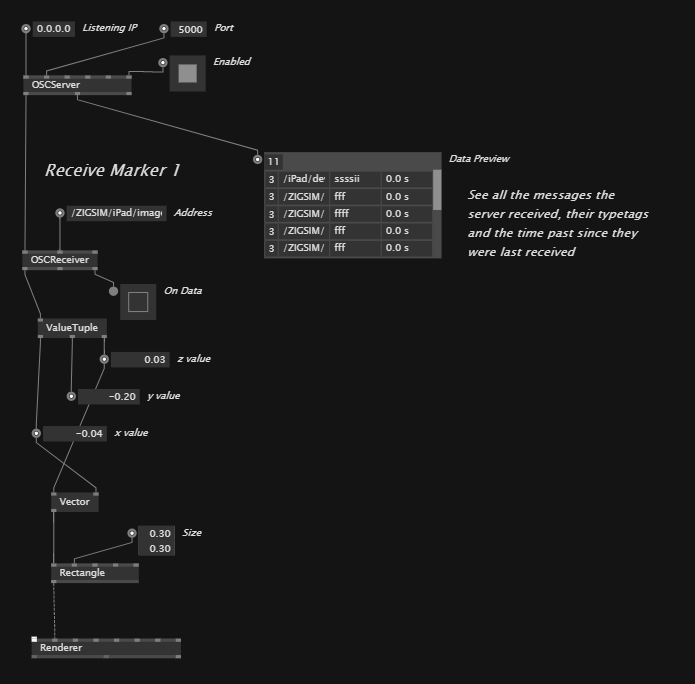

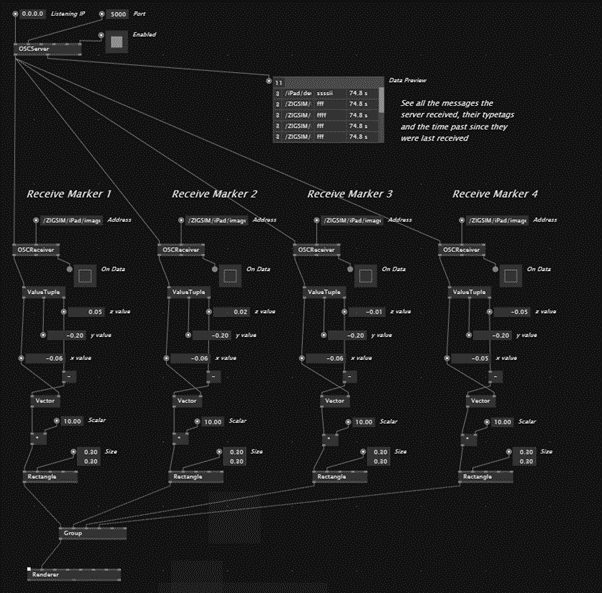

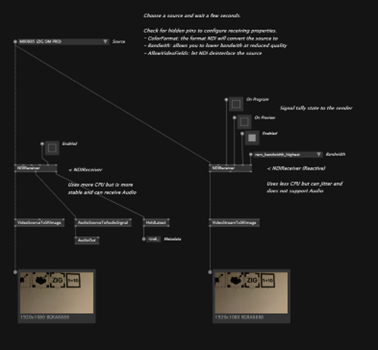

1. Example: Setup for vvvv

To display video content in vvvv we need an addon called VL.IO.NDI, which can be downloaded from the following link:

https://github.com/vvvv/VL.IO.NDI

Be aware that this addon needs the latest vvvv preview build (5.0) to work properly!

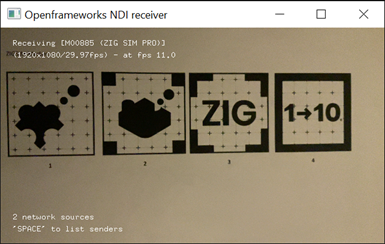

2. Example: Setup for OpenFrameworks

To test the connection in OpenFrameworks we use the ofxNDI addon, which can be downloaded from the following link:

https://github.com/leadedge/ofxNDI

After generating the project with the OpenFrameworks project generator, we build the app in Visual Studio. While running, the app searches for available NDI sources and lets us display the selected video stream within the app.
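When several NDI senders are on the network (for example the Test Patterns app next to ZigSim), it can be handy to pick the ZigSim stream automatically instead of by hand. NDI reports each source by a name of the form `MACHINE_NAME (SOURCE_NAME)`. Below is a minimal, library-independent C++ sketch of that selection step. It assumes you already have the list of sender names from whatever discovery call your receiver offers; the helpers `parseSourceName` and `findSourceIndex` are hypothetical, and the exact name ZigSim broadcasts under is not confirmed here, so check it in Studio Monitor first.

```cpp
#include <algorithm>
#include <cassert>
#include <cctype>
#include <cstddef>
#include <optional>
#include <string>
#include <vector>

// An NDI source name split into its two parts: "MACHINE_NAME (SOURCE_NAME)".
struct NdiSourceName {
    std::string machine;
    std::string stream;
};

// Hypothetical helper: split a discovered source name into machine and stream.
// Returns std::nullopt if the name does not follow the "MACHINE (STREAM)" pattern.
std::optional<NdiSourceName> parseSourceName(const std::string& full) {
    const auto open = full.find(" (");
    if (open == std::string::npos || full.size() < open + 3 || full.back() != ')') {
        return std::nullopt;
    }
    // Stream name sits between " (" and the trailing ')'.
    return NdiSourceName{full.substr(0, open),
                         full.substr(open + 2, full.size() - open - 3)};
}

// Lower-case an ASCII string for case-insensitive matching.
std::string toLower(std::string s) {
    std::transform(s.begin(), s.end(), s.begin(),
                   [](unsigned char c) { return static_cast<char>(std::tolower(c)); });
    return s;
}

// Hypothetical helper: index of the first source whose stream name contains
// `needle` (case-insensitive), or -1 if no source matches.
int findSourceIndex(const std::vector<std::string>& sources, const std::string& needle) {
    const std::string wanted = toLower(needle);
    for (std::size_t i = 0; i < sources.size(); ++i) {
        const auto parsed = parseSourceName(sources[i]);
        if (parsed && toLower(parsed->stream).find(wanted) != std::string::npos) {
            return static_cast<int>(i);
        }
    }
    return -1;
}
```

With a hypothetical list such as `{"LAPTOP (Test Pattern)", "IPAD (ZIG SIM)"}`, `findSourceIndex(list, "zig")` returns `1`, and the receiver can then be pointed at that index.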

Using image detection, tracking, or machine learning tools such as TensorFlow or OpenCV, this video source can then be processed within vvvv or OpenFrameworks.
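As a very simple stand-in for those tools (this is plain frame differencing, not OpenCV or TensorFlow), the C++ sketch below shows what processing a received frame can look like: it compares two consecutive frames and reports the region where pixels changed. It assumes each frame has already been copied into a tightly packed 8-bit RGBA buffer. NDI can also deliver other pixel formats such as UYVY, so how you get to RGBA depends on your receiver settings. The function name `motionBoundingBox` and its `threshold` parameter are hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstdlib>
#include <optional>
#include <vector>

// Axis-aligned bounding box in pixel coordinates (inclusive bounds).
struct Box {
    int minX, minY, maxX, maxY;
};

// Compare two tightly packed 8-bit RGBA frames of size width x height and
// return the bounding box of all pixels whose luminance changed by more than
// `threshold`, or std::nullopt if nothing changed.
std::optional<Box> motionBoundingBox(const std::vector<std::uint8_t>& prev,
                                     const std::vector<std::uint8_t>& curr,
                                     int width, int height, int threshold) {
    assert(prev.size() == curr.size());
    assert(curr.size() == static_cast<std::size_t>(width) * height * 4);

    // Integer approximation of Rec. 601 luma: (77 R + 150 G + 29 B) / 256.
    auto luma = [](const std::uint8_t* px) {
        return (77 * px[0] + 150 * px[1] + 29 * px[2]) >> 8;
    };

    std::optional<Box> box;
    for (int y = 0; y < height; ++y) {
        for (int x = 0; x < width; ++x) {
            const std::size_t i = (static_cast<std::size_t>(y) * width + x) * 4;
            if (std::abs(luma(&curr[i]) - luma(&prev[i])) <= threshold) continue;
            if (!box) {
                box = Box{x, y, x, y};  // first changed pixel
            } else {
                box->minX = std::min(box->minX, x);
                box->minY = std::min(box->minY, y);
                box->maxX = std::max(box->maxX, x);
                box->maxY = std::max(box->maxY, y);
            }
        }
    }
    return box;
}
```

A single bounding box is crude: two moving objects merge into one box, and camera noise needs a sensible threshold. Dedicated tracking or detection libraries are usually the better choice for an actual exhibit.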

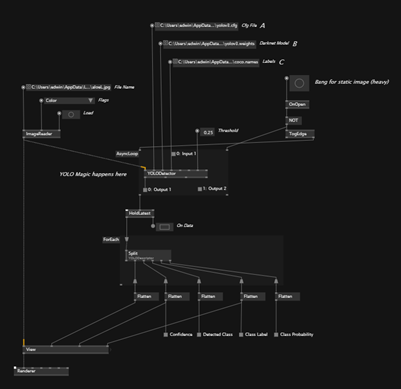

The following prebuilt vvvv example shows how the YOLOv3 algorithm successfully recognizes objects within a picture. How many objects are recognized, and how accurately, depends on the training data set, which could also be custom-made to suit the use case of a given exhibit.
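Detectors like YOLOv3 typically output many overlapping candidate boxes, each with a class and a confidence score. The final detections are usually obtained by discarding low-confidence candidates and then applying non-maximum suppression (NMS). The C++ sketch below is a generic greedy per-class NMS, not the code used inside the vvvv example; the `Detection` struct, the function names and the threshold parameters are assumptions for illustration.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

// A candidate detection: box corners in pixels, class id and confidence score.
struct Detection {
    float x1, y1, x2, y2;
    int classId;
    float score;
};

// Intersection over union of two boxes (0 when they do not overlap).
float iou(const Detection& a, const Detection& b) {
    const float ix = std::max(0.0f, std::min(a.x2, b.x2) - std::max(a.x1, b.x1));
    const float iy = std::max(0.0f, std::min(a.y2, b.y2) - std::max(a.y1, b.y1));
    const float inter = ix * iy;
    const float areaA = (a.x2 - a.x1) * (a.y2 - a.y1);
    const float areaB = (b.x2 - b.x1) * (b.y2 - b.y1);
    const float uni = areaA + areaB - inter;
    return uni > 0.0f ? inter / uni : 0.0f;
}

// Greedy per-class NMS: drop candidates below `minScore`, then keep the
// highest-scoring box and suppress same-class boxes overlapping it by more than `maxIou`.
std::vector<Detection> filterDetections(std::vector<Detection> candidates,
                                        float minScore, float maxIou) {
    assert(maxIou >= 0.0f && maxIou <= 1.0f);
    candidates.erase(std::remove_if(candidates.begin(), candidates.end(),
                                    [minScore](const Detection& d) { return d.score < minScore; }),
                     candidates.end());
    // Highest confidence first.
    std::sort(candidates.begin(), candidates.end(),
              [](const Detection& a, const Detection& b) { return a.score > b.score; });

    std::vector<Detection> kept;
    for (const Detection& d : candidates) {
        bool suppressed = false;
        for (const Detection& k : kept) {
            if (k.classId == d.classId && iou(k, d) > maxIou) {
                suppressed = true;
                break;
            }
        }
        if (!suppressed) kept.push_back(d);
    }
    return kept;
}
```

Raising `minScore` gives fewer false positives at the cost of missing weaker detections; lowering `maxIou` merges more aggressively, which can hide objects standing close together.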