Research question: Is it possible to measure underwater sound using spatial audio microphone techniques? Is it possible to reproduce underwater spatial audio?

- Introduction

- Definitions

- Acoustics and psychoacoustics of spatial audio

– How does the human ear localise a spatial sound?

- Acoustics and psychoacoustics of underwater sound

- Propagation of the signal

- Building a hydrophone microphone

- Building a hydrophone microphone array

- Application of underwater recordings

- Resources:

Websites:

– Capturing 3D audio underwater

– Microphone Array

– On 3D audio recording

Literature:

- Jens Blauert – Spatial Hearing [The MIT Press, Cambridge, MA]

- Michael Williams – Multichannel microphone arrays

- Justin Paterson & Hyunkook Lee – 3D Audio [Routledge]

- Douglas A. Abraham – Underwater Acoustic Signal Processing [Springer]

- Franz Zotter, Matthias Frank – Ambisonics [Springer]

About spatial audio (development of the concept)

A phantom “image” in stereo

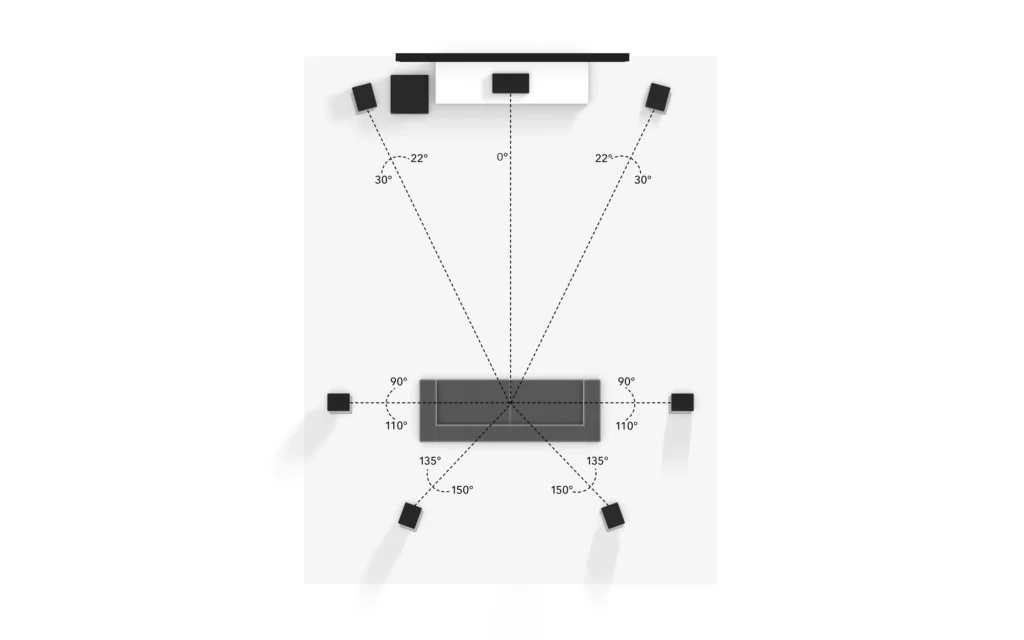

When the same signal is sent to both speakers, the listener perceives a single sound coming from the front, midway between them: a phantom image. Changing the gain ratio between the two speakers moves the phantom image between them and gives an impression of movement. To keep the central phantom image stable, the speakers are usually placed 60 degrees apart (±30° from the listener's front direction). Increasing the angle beyond 60 degrees can create a “hole in the middle”: the central image is lost and the listener starts perceiving two independent sources (the left and right speakers).
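The gain-based panning described above can be sketched with two classic models: a constant-power pan law to set the speaker gains, and the stereophonic tangent law to predict where the phantom image appears for speakers at ±30°. Both are textbook low-frequency approximations chosen here for illustration; the function names are hypothetical.

```python
import math
from typing import Tuple


def constant_power_gains(pan: float) -> Tuple[float, float]:
    """Constant-power pan law (one common choice among several).

    pan = -1.0 is fully left, 0.0 is center, +1.0 is fully right.
    Returns (g_left, g_right) with g_left**2 + g_right**2 == 1.
    """
    theta = (pan + 1.0) * math.pi / 4.0  # map [-1, 1] onto [0, pi/2]
    return math.cos(theta), math.sin(theta)


def tangent_law_azimuth(g_left: float, g_right: float,
                        half_base_deg: float = 30.0) -> float:
    """Predicted phantom-image azimuth in degrees (positive = towards the left speaker).

    Stereophonic tangent law: tan(phi) / tan(phi0) = (gL - gR) / (gL + gR),
    where phi0 is half the angle between the speakers (30 degrees for a 60-degree base).
    """
    phi0 = math.radians(half_base_deg)
    ratio = (g_left - g_right) / (g_left + g_right)
    return math.degrees(math.atan(ratio * math.tan(phi0)))


if __name__ == "__main__":
    for pan in (-1.0, -0.5, 0.0, 0.5, 1.0):
        gl, gr = constant_power_gains(pan)
        print(f"pan={pan:+.1f}  gL={gl:.3f}  gR={gr:.3f}  "
              f"image={tangent_law_azimuth(gl, gr):+.1f} deg")
```

With equal gains the predicted image sits at 0° (the phantom center); with all signal in one speaker it sits at that speaker's position, ±30°.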

Center speaker

The movie industry added a center speaker to stabilise the frontal sound image for listeners who are not seated in the sweet spot. For these listeners a phantom center tends to shift towards the nearer speaker, so a physical center speaker works as an anchor and gives a more defined, stable sound image.
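A simple geometric sketch shows why an off-center seat loses the phantom center: moving sideways makes the nearer speaker's sound arrive first. The geometry (an equilateral triangle with 2 m sides) and the function name are assumptions for illustration. With the listener 0.5 m to the left, the left speaker leads by roughly 1.4 ms; once one speaker leads by more than about 1 ms, the precedence effect tends to pull the image towards that earlier speaker.

```python
import math

SPEED_OF_SOUND_AIR = 343.0  # m/s, the value used elsewhere in these notes


def arrival_time_difference_ms(listener_x: float,
                               speaker_spacing: float = 2.0,
                               depth: float = math.sqrt(3.0)) -> float:
    """Arrival time of the left speaker minus the right speaker, in milliseconds.

    Assumed geometry: speakers at (-spacing/2, depth) and (+spacing/2, depth),
    listener at (listener_x, 0). The defaults form an equilateral triangle with
    2 m sides (60 degrees between the speakers at the sweet spot).
    A negative result means the left speaker is heard first.
    """
    half = speaker_spacing / 2.0
    d_left = math.hypot(listener_x + half, depth)
    d_right = math.hypot(listener_x - half, depth)
    return (d_left - d_right) / SPEED_OF_SOUND_AIR * 1000.0


if __name__ == "__main__":
    for x in (0.0, -0.1, -0.25, -0.5, -1.0):
        print(f"listener {x:+.2f} m off center: "
              f"{arrival_time_difference_ms(x):+.2f} ms")
```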

Surround speakers

To make the movie experience more realistic, additional speakers were introduced at the sides and rear. One of the first formats was LCRS (left, center, right, surround): 5 speakers but only 4 channels, because the two rear speakers share a single surround channel. In movie theatres, the surround channels are reproduced by arrays of speakers: all speakers on the left wall reproduce the left surround channel and all speakers on the right wall reproduce the right surround channel. This prevents a “hole” (a region where no surround sound seems to come out) and gives every listener nearly the same surround experience. The price is a loss of spatial resolution: the surround sound becomes more diffuse.

To reproduce low frequencies, an additional speaker with its own audio channel was added. A single separate channel works because humans localise very low frequencies poorly, so one speaker does not disturb the spatial image. In addition, since reproducing low frequencies requires more energy, this channel is reproduced at a higher level than the other channels. There was also a practical reason: theatres only had to buy one extra speaker instead of replacing the entire sound system with larger speakers. That is how 5.1 was born: 5 main channels + 1 low-frequency effects (LFE) channel.
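A rough calculation shows why a single low-frequency speaker hardly affects the spatial image: at 120 Hz and below, the wavelength is more than ten times the width of the head, so the head casts almost no acoustic shadow and interaural level differences are negligible. In cinema, the LFE channel is reproduced with +10 dB of in-band gain relative to the main channels; the second helper converts that figure into an amplitude factor. The head width is an assumed typical value and the helper names are hypothetical.

```python
SPEED_OF_SOUND_AIR = 343.0  # m/s
HEAD_WIDTH = 0.175          # m, assumed typical adult interaural distance


def wavelength_m(frequency_hz: float, c: float = SPEED_OF_SOUND_AIR) -> float:
    """Wavelength lambda = c / f."""
    return c / frequency_hz


def db_to_amplitude(gain_db: float) -> float:
    """Convert a level change in dB to a linear amplitude factor."""
    return 10.0 ** (gain_db / 20.0)


if __name__ == "__main__":
    for f in (20.0, 80.0, 120.0, 1000.0, 4000.0):
        lam = wavelength_m(f)
        print(f"{f:>6.0f} Hz: wavelength {lam:5.2f} m, "
              f"{lam / HEAD_WIDTH:5.1f}x head width")
    print(f"+10 dB LFE gain = x{db_to_amplitude(10.0):.2f} in amplitude")
```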

Other Horizontal Layouts (6.1/7.1)

Other layouts were created by adding further channels.

In the 1990s, Sony SDDS used a 7.1 setup (5 front channels and 2 surround channels).

Dolby created Dolby Digital EX, a 6.1 format that adds a rear surround channel.

The now-standard 7.1 layout uses 3 front channels and 4 surround channels, increasing the spatial resolution at the sides and rear.

Immersive Audio

To extend the sound image beyond the horizontal plane (front, sides, rear), immersive systems were developed. By adding a height component (speakers on the ceiling or below the listener), they let listeners perceive sound from all directions.

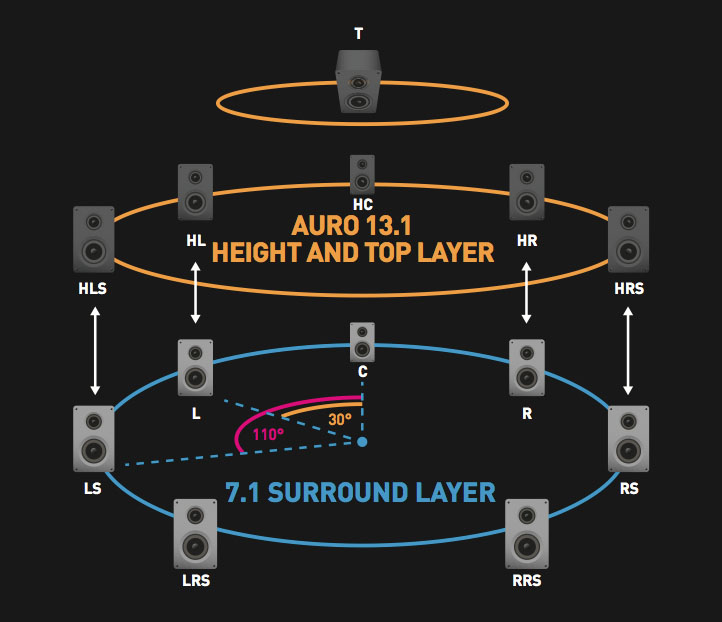

Auro 11.1 / Auro 13.1: a horizontal layer in 5.1 (Auro 11.1) or 7.1 (Auro 13.1), plus a height layer in 5.0 and one speaker directly above the listener.

IMAX 12.0: 7 channels in the horizontal plane and 5 height channels, with no LFE channel.

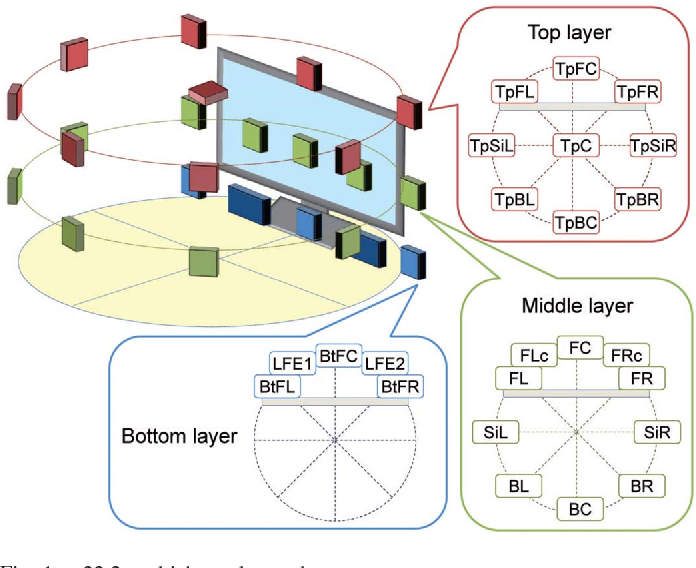

NHK 22.2: consists of 2 LFE channels, a lower layer with 3 speakers (below horizontal plane, with sound coming from the ground), a horizontal layer with 10 channels (5 frontal, 5 surrounds), a height layer with 8 channels and 1 “voice of god”.
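The "X.Y" names used above count full-range channels before the dot and LFE channels after it. As a consistency check, the sketch below encodes the per-layer channel counts given in these notes and derives each name from them. The `Layout` type and its layer split are an illustrative model, not an industry schema; in this notation LCRS would be a 4.0 format.

```python
from typing import Dict, NamedTuple


class Layout(NamedTuple):
    """Channel counts per layer (illustrative model, not an industry schema)."""
    horizontal: int = 0  # ear-level channels
    height: int = 0      # channels above the horizontal plane, incl. overhead
    lower: int = 0       # channels below the horizontal plane
    lfe: int = 0         # low-frequency effects channels

    def name(self) -> str:
        """'X.Y' name: full-range channels before the dot, LFE channels after it."""
        return f"{self.horizontal + self.height + self.lower}.{self.lfe}"


# Channel counts as described in these notes.
LAYOUTS: Dict[str, Layout] = {
    "LCRS": Layout(horizontal=4),
    "5.1": Layout(horizontal=5, lfe=1),
    "Dolby Digital EX 6.1": Layout(horizontal=6, lfe=1),
    "SDDS 7.1": Layout(horizontal=7, lfe=1),   # 5 front + 2 surround
    "7.1": Layout(horizontal=7, lfe=1),        # 3 front + 4 surround
    "Auro 11.1": Layout(horizontal=5, height=5 + 1, lfe=1),
    "Auro 13.1": Layout(horizontal=7, height=5 + 1, lfe=1),
    "IMAX 12.0": Layout(horizontal=7, height=5),
    "NHK 22.2": Layout(horizontal=10, height=8 + 1, lower=3, lfe=2),
}

if __name__ == "__main__":
    for label, layout in LAYOUTS.items():
        print(f"{label:<22} -> {layout.name()}")
```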

2. Definitions

Binaural cues = cues derived from differences between the signals at the two ears (mainly ITD and ILD); they are the most prominent auditory cues for determining the direction of a sound source.

Channel-based audio = audio formats with a predefined number of channels and predefined speaker positions.

ITD (interaural time difference) = the difference in arrival time of a sound at the two ears

IID/ILD (interaural intensity/level difference) = the difference in level of a sound at the two ears

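Phantom image = a sound source perceived at a position where there is no loudspeaker, typically between two speakers fed with the same (or correlated) signal

Shadowing effect = attenuation, mainly of high frequencies, at the ear facing away from the source, because the head acts as an acoustic barrier; it is the main cause of ILD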

Vector Base Amplitude Panning (VBAP) = a panning method that recreates the perception of a sound coming from anywhere within a triangle of speakers by distributing gains among those three speakers
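A minimal sketch of three-dimensional VBAP, following Ville Pulkki's formulation: the source direction p is written as a weighted sum of the three speaker unit vectors, p = g1·l1 + g2·l2 + g3·l3; the 3×3 system is solved for the gains (here by Cramer's rule) and the gains are normalised to constant power. A negative gain means the source lies outside the speaker triangle, so another triangle should be chosen. The function names and the axis convention (x = front, y = left, z = up) are assumptions.

```python
import math
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


def direction(azimuth_deg: float, elevation_deg: float) -> Vec3:
    """Unit vector; assumed convention: x = front, y = left, z = up."""
    az, el = math.radians(azimuth_deg), math.radians(elevation_deg)
    return (math.cos(el) * math.cos(az), math.cos(el) * math.sin(az), math.sin(el))


def _cross(u: Sequence[float], v: Sequence[float]) -> Vec3:
    return (u[1] * v[2] - u[2] * v[1],
            u[2] * v[0] - u[0] * v[2],
            u[0] * v[1] - u[1] * v[0])


def _dot(u: Sequence[float], v: Sequence[float]) -> float:
    return u[0] * v[0] + u[1] * v[1] + u[2] * v[2]


def vbap_gains(p: Sequence[float], l1: Sequence[float],
               l2: Sequence[float], l3: Sequence[float]) -> Vec3:
    """Solve p = g1*l1 + g2*l2 + g3*l3 (Cramer's rule), then normalise to unit power.

    A negative gain means the source direction lies outside the speaker triangle.
    """
    det = _dot(l1, _cross(l2, l3))  # determinant of the matrix [l1 l2 l3]
    if abs(det) < 1e-12:
        raise ValueError("speaker directions must not be coplanar")
    g1 = _dot(p, _cross(l2, l3)) / det
    g2 = _dot(l1, _cross(p, l3)) / det
    g3 = _dot(l1, _cross(l2, p)) / det
    norm = math.sqrt(g1 * g1 + g2 * g2 + g3 * g3)
    return (g1 / norm, g2 / norm, g3 / norm)


if __name__ == "__main__":
    # Hypothetical triangle: front-left, front-right and one elevated center speaker.
    left, right, top = direction(30, 0), direction(-30, 0), direction(0, 45)
    print(vbap_gains(direction(10, 15), left, right, top))
```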

4. Acoustics and psychoacoustics of underwater sound

Localization underwater – what makes it so difficult?

- From the ITD perspective: the speed of sound is about 343 m/s in air and about 1480 m/s underwater (underwater it depends mainly on temperature, salinity and depth).

“A speed of sound that is more than 4x higher than the speed of sound in air will result in sound arriving in our ear with much smaller time differences, which could possibly diminish or even eliminate completely our spatial hearing abilities.”
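To put numbers on the quote above, the sketch uses a deliberately simple free-field model, ITD ≈ d·sin(θ)/c, where d is the distance between the ears and θ the source azimuth. It ignores diffraction around the head (in air, a spherical-head model gives somewhat larger values), and the 0.175 m ear distance is an assumed typical value. Because the ITD scales with 1/c, every time difference underwater shrinks by the factor 1480/343 ≈ 4.3: a source at 90° gives about 510 µs in air but only about 118 µs in water.

```python
import math

SPEED_AIR = 343.0     # m/s, from the text
SPEED_WATER = 1480.0  # m/s, from the text
EAR_DISTANCE = 0.175  # m, assumed typical interaural distance


def itd_us(azimuth_deg: float, c: float, d: float = EAR_DISTANCE) -> float:
    """Free-field ITD in microseconds (0 deg = front, 90 deg = side).

    Assumes a straight path between the ears and no diffraction around the head.
    """
    return d * math.sin(math.radians(azimuth_deg)) / c * 1e6


if __name__ == "__main__":
    for az in (0, 15, 45, 90):
        print(f"{az:>2} deg: air {itd_us(az, SPEED_AIR):6.1f} us, "
              f"water {itd_us(az, SPEED_WATER):6.1f} us")
    print(f"shrink factor: {SPEED_WATER / SPEED_AIR:.2f}")
```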

- From the IID perspective: there is very little acoustic impedance mismatch between water and our head.

“Underwater sound most likely travels directly through our head since the impedances of water and our head are very similar, and the head is acoustically transparent.”

“In air, our head acts as an acoustic barrier that attenuates high frequencies due to shadowing effect.”
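A normal-incidence plane-wave model makes the impedance argument concrete: the fraction of sound intensity crossing a boundary between media with characteristic impedances Z1 = ρ1·c1 and Z2 = ρ2·c2 is T = 4·Z1·Z2/(Z1 + Z2)². Modelling the head as soft tissue is a strong simplification (bone, air cavities and head geometry are ignored), and the densities and the tissue sound speed are assumed typical values. With these numbers, only about 0.1 % of the intensity (roughly -30 dB) crosses from air into tissue, while almost all of it crosses from water.

```python
import math

# Characteristic acoustic impedance Z = rho * c, in rayl (Pa*s/m).
# Densities and the tissue sound speed are assumed typical values, not measurements.
Z_AIR = 1.2 * 343.0          # ~412 rayl
Z_WATER = 1000.0 * 1480.0    # ~1.48 Mrayl (fresh-water density assumed)
Z_TISSUE = 1050.0 * 1540.0   # ~1.62 Mrayl, soft-tissue stand-in for the head


def intensity_transmission(z1: float, z2: float) -> float:
    """Fraction of intensity transmitted at normal incidence across a z1 -> z2 boundary."""
    return 4.0 * z1 * z2 / (z1 + z2) ** 2


if __name__ == "__main__":
    for label, z in (("air", Z_AIR), ("water", Z_WATER)):
        t = intensity_transmission(z, Z_TISSUE)
        print(f"{label:>5} -> tissue: {t:.4f} transmitted "
              f"({10 * math.log10(t):.1f} dB)")
```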

“It is not entirely clear whether the underwater localization ability is something that humans can learn and adapt or if it’s a limitation of the human auditory system itself.”