The development of accurate and reliable string tuners has been a subject of great interest and innovation in the field of music technology.

As a result, numerous projects and technologies have emerged, each aiming to provide precise pitch detection and tuning for stringed instruments. In this analysis, we compare two prominent pitch-detection string tuners, the Motorized Guitar Tuner [1] and the Cyther V3 [2], to identify the most effective approach for advancing our own project.

By analyzing these two projects, we aim to identify the strengths and weaknesses of each approach and decide how best to proceed with our own pitch detection string tuner. The analysis considers accuracy, precision, responsiveness, hardware components, software algorithms, and potential areas for improvement. The goal is to use the insights gained from these existing projects to guide the development of our own innovative and highly precise pitch detection string tuner.

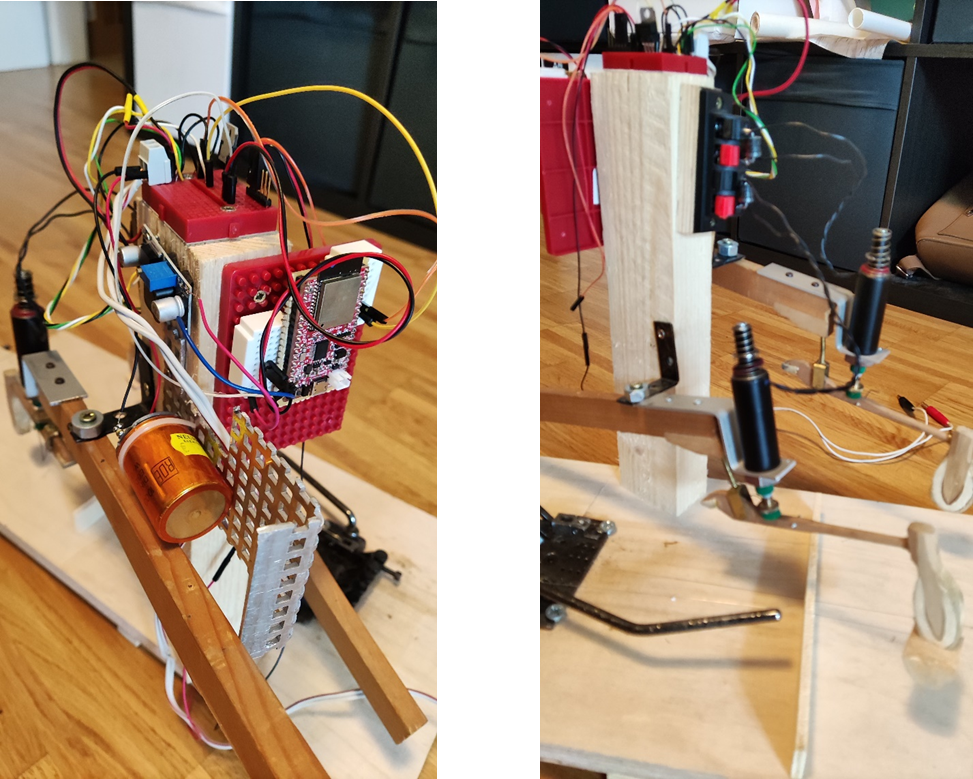

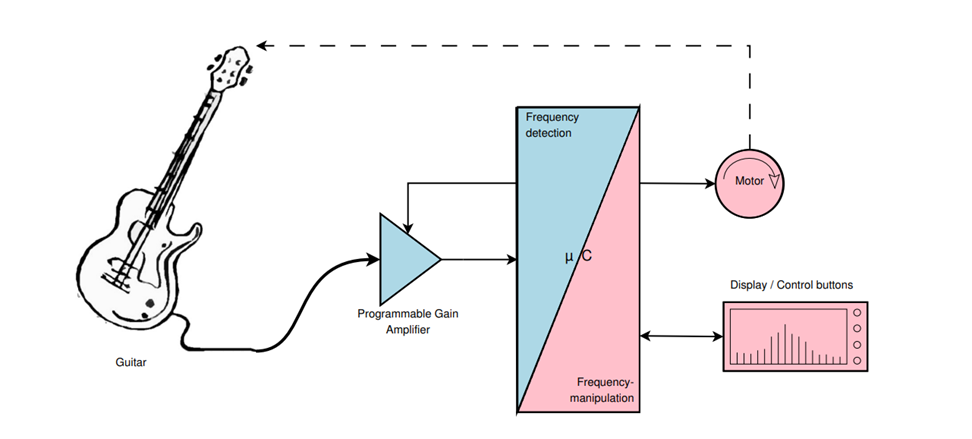

The Motorized Guitar Tuner [1] (Fig. 6) is a hand-held device that automatically tunes an electric guitar using a microcontroller and a servo motor. It processes the guitar's input signal to estimate pitch with high accuracy and applies a control algorithm to drive the motor. The tuning results are good, but accuracy is limited by the interaction of the strings with each other and with the guitar neck.

The project documentation [1] discusses several methods for detecting zero crossings and for generating the PWM signals that control the servo motor. Section 2.2.4 describes the pure zero-crossings method, which detects zero crossings directly in the raw signal without any prior calculations. This method proved unusable because the harmonics present in a guitar string signal introduce additional zero crossings. The autocorrelation method was more promising, since it picks out periodic components reliably even in the presence of noise or strong harmonics. It was therefore chosen as the frequency detection method.
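The contrast between the two methods can be illustrated with a minimal pure-Python sketch. It is not the Motorized Guitar Tuner's implementation: the function names, the 8 kHz sample rate, the 60 to 1000 Hz search range, and the synthetic test signal (a 110 Hz fundamental plus a stronger second harmonic) are all assumptions chosen for illustration. The autocorrelation variant shown is a simple length-normalised time-domain version with parabolic peak interpolation.

```python
import math


def zero_crossing_pitch(samples, sample_rate):
    """Naive 'pure zero crossings' estimate: assumes two sign changes per period."""
    crossings = sum(1 for a, b in zip(samples, samples[1:]) if (a < 0) != (b < 0))
    duration_s = len(samples) / sample_rate
    return crossings / (2.0 * duration_s)


def autocorrelation_pitch(samples, sample_rate, f_min=60.0, f_max=1000.0):
    """Return sample_rate / lag for the lag with the highest normalised autocorrelation."""
    n = len(samples)
    min_lag = int(sample_rate / f_max)
    max_lag = int(sample_rate / f_min)
    r = {}
    for lag in range(min_lag, max_lag + 1):
        acc = sum(samples[i] * samples[i + lag] for i in range(n - lag))
        r[lag] = acc / (n - lag)  # normalise so longer lags are not penalised
    best = max(r, key=r.get)
    # Parabolic interpolation around the peak gives a sub-sample lag estimate.
    offset = 0.0
    if best - 1 in r and best + 1 in r:
        y0, y1, y2 = r[best - 1], r[best], r[best + 1]
        denom = y0 - 2.0 * y1 + y2
        if denom != 0.0:
            offset = 0.5 * (y0 - y2) / denom
    return sample_rate / (best + offset)


# Hypothetical test signal: 110 Hz fundamental plus a stronger 2nd harmonic.
SAMPLE_RATE = 8000
F0 = 110.0
signal = []
for k in range(2048):
    phase = 2.0 * math.pi * F0 * k / SAMPLE_RATE + 0.3
    signal.append(math.sin(phase) + 1.5 * math.sin(2.0 * phase))

zc_estimate = zero_crossing_pitch(signal, SAMPLE_RATE)
ac_estimate = autocorrelation_pitch(signal, SAMPLE_RATE)
print(f"zero crossings: {zc_estimate:.1f} Hz, autocorrelation: {ac_estimate:.1f} Hz")
```

With this signal the strong harmonic doubles the number of sign changes per period, so the zero-crossing estimate lands near 220 Hz (an octave too high), while the autocorrelation peak still sits at the fundamental period and recovers roughly 110 Hz.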

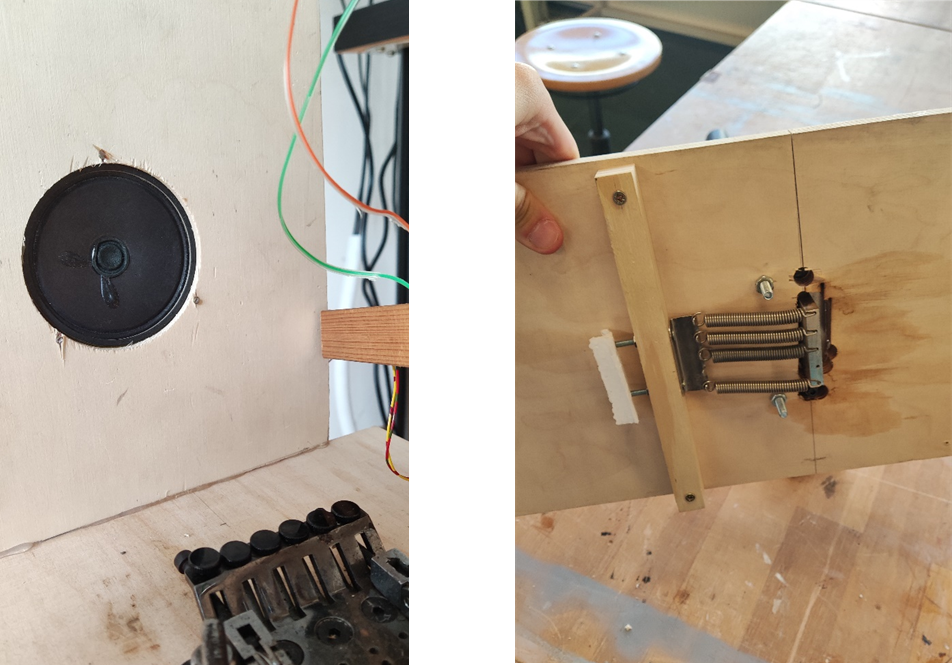

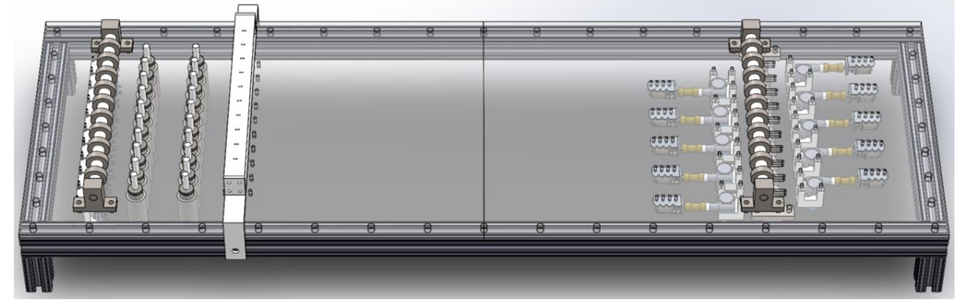

The second project, the Cyther V3 [2] (Fig. 7), is a mechatronic string instrument that can autonomously tune each string during a performance. Its tuning system senses string tension, estimates pitch from it, adjusts the tension, and uses optical pickups to correct errors in the pitch estimate. In testing, the system was accurate to within ±8 cents, which is not precise enough for the error to go unnoticed by human listeners.
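To make the ±8 cents figure concrete, the following sketch converts between cents and hertz using the standard definition (1200 cents per octave). The function names are hypothetical, and the 440 Hz reference is only an illustrative target, not a value from the Cyther V3 report.

```python
import math


def cents_error(measured_hz, target_hz):
    """Signed deviation in cents: 1200 * log2(measured / target); negative means flat."""
    return 1200.0 * math.log2(measured_hz / target_hz)


def offset_by_cents(target_hz, cents):
    """Frequency lying `cents` away from target_hz."""
    return target_hz * 2.0 ** (cents / 1200.0)


# A4 = 440 Hz is an illustrative reference, not a value from the report.
low, high = offset_by_cents(440.0, -8.0), offset_by_cents(440.0, 8.0)
print(f"+/-8 cents around 440 Hz: {low:.2f} Hz to {high:.2f} Hz")
```

At 440 Hz, a ±8 cent tolerance spans roughly 437.97 Hz to 442.04 Hz, i.e. about ±2 Hz; a semitone is 100 cents on the same scale.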

The tuning system is designed to sense the pitch of each string continuously, so that the instrument can perform pitch-changing techniques such as portamento and vibrato at any point during a performance. Tension sensors measure the tension in each string, and the pitch is estimated from that reading. The actuators should be able to shift the pitch of any string by a semitone in 100 ms or less.
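As background for estimating pitch from tension, the textbook relation for an ideal flexible string (Mersenne's laws) links frequency $f$ to tension $T$, vibrating length $L$, and linear mass density $\mu$. This is standard physics under the ideal-string assumption (string stiffness ignored), not a model taken from the Cyther V3 report. It shows what the semitone requirement implies for tension:

$$
f = \frac{1}{2L}\sqrt{\frac{T}{\mu}}
\qquad\Longrightarrow\qquad
\frac{T'}{T} = \left(\frac{f'}{f}\right)^{2},
\qquad
\frac{f'}{f} = 2^{1/12} \;\Rightarrow\; \frac{T'}{T} = 2^{1/6} \approx 1.122
$$

For an ideal string, raising the pitch by a semitone therefore needs about 12% more tension, and lowering it by a semitone about 11% less ($2^{-1/6} \approx 0.891$), within the 100 ms budget.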

The software uses closed-loop control to keep every string at its desired pitch. It should also adjust the pitch estimation function over time to compensate for small changes to the instrument that alter the strings' pitches.
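A closed loop of this kind can be sketched as proportional control on the pitch error measured in cents. Everything here is hypothetical: `SimulatedString` stands in for the real string and actuator using the ideal-string model, the gain and tolerance are arbitrary, and the pitch is read directly from the simulation rather than from a tension-based estimate or an optical pickup. It is not the Cyther V3 controller.

```python
import math


def cents_error(measured_hz, target_hz):
    """Signed pitch error in cents (negative = flat)."""
    return 1200.0 * math.log2(measured_hz / target_hz)


class SimulatedString:
    """Hypothetical plant: ideal string, f = sqrt(T / mu) / (2 * L)."""

    def __init__(self, length_m, mu_kg_per_m, tension_n):
        self.length_m = length_m
        self.mu_kg_per_m = mu_kg_per_m
        self.tension_n = tension_n

    def frequency_hz(self):
        return math.sqrt(self.tension_n / self.mu_kg_per_m) / (2.0 * self.length_m)


def tune(string, target_hz, gain=0.5, tolerance_cents=1.0, max_steps=50):
    """Proportional control on the cents error; returns (steps_taken, final_error_cents)."""
    for step in range(max_steps):
        error = cents_error(string.frequency_hz(), target_hz)
        if abs(error) <= tolerance_cents:
            return step, error
        # Shift pitch by -gain * error cents. Since T is proportional to f^2,
        # a shift of c cents needs a tension ratio of 2 ** (2 * c / 1200).
        correction_cents = -gain * error
        string.tension_n *= 2.0 ** (2.0 * correction_cents / 1200.0)
    return max_steps, cents_error(string.frequency_hz(), target_hz)


# Illustrative values only (not taken from the Cyther V3 report).
s = SimulatedString(length_m=0.648, mu_kg_per_m=0.006, tension_n=60.0)
steps, final_error = tune(s, target_hz=110.0)
print(f"converged in {steps} steps, residual {final_error:+.2f} cents")
```

Working in cents keeps the gain meaningful across strings of very different pitch; with a gain of 0.5 each iteration halves the remaining error in this idealised model, whereas a real actuator would also need rate limits and would face measurement noise.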

Optical pickups measure the frequency of each string. These measurements are used to update the curve that relates each string's frequency to the tension sensor's potentiometer value, which prevents errors in the tuning system from compounding.
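One way such a curve update could work is a least-squares refit over a sliding window of (potentiometer value, measured frequency) pairs. This sketch assumes, for illustration only, that the potentiometer reading is roughly linear in tension, so that frequency squared is linear in the reading; the class name, window size, and linear form are hypothetical and not taken from the Cyther V3 report.

```python
import math
from collections import deque


class PitchCalibration:
    """Hypothetical calibration: f^2 ~= a * pot + b, refit on a sliding window."""

    def __init__(self, window=32):
        self.samples = deque(maxlen=window)  # oldest pairs drop out automatically
        self.a = None
        self.b = None

    def add_measurement(self, pot_value, measured_hz):
        """Record an optical-pickup measurement and refit the curve."""
        self.samples.append((pot_value, measured_hz ** 2))
        if len(self.samples) >= 2:
            self._refit()

    def _refit(self):
        n = len(self.samples)
        mean_x = sum(x for x, _ in self.samples) / n
        mean_y = sum(y for _, y in self.samples) / n
        sxx = sum((x - mean_x) ** 2 for x, _ in self.samples)
        if sxx == 0:
            return  # all readings at one pot value: slope undefined, keep previous fit
        sxy = sum((x - mean_x) * (y - mean_y) for x, y in self.samples)
        self.a = sxy / sxx
        self.b = mean_y - self.a * mean_x

    def estimate_hz(self, pot_value):
        """Estimate pitch from a tension-sensor reading using the current fit."""
        if self.a is None:
            raise ValueError("calibration needs at least two distinct pot readings")
        return math.sqrt(max(self.a * pot_value + self.b, 0.0))


cal = PitchCalibration(window=8)
for pot in range(100, 500, 50):  # initial behaviour: f^2 = 20 * pot + 1000
    cal.add_measurement(pot, math.sqrt(20 * pot + 1000))
before = cal.estimate_hz(300)
for pot in range(100, 500, 50):  # drifted behaviour: f^2 = 20 * pot + 500
    cal.add_measurement(pot, math.sqrt(20 * pot + 500))
after = cal.estimate_hz(300)
```

Because stale pairs leave the window, the fit follows slow drift (for example, a string stretching) instead of letting an outdated curve bias every later estimate; the window length trades responsiveness against noise.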

[1] TU Graz. Available at: https://www2.spsc.tugraz.at/www-archive/downloads/MGT_documentation.pdf (Accessed: 09 June 2023).

[2] Dynamically tuning string instrument – web.wpi.edu. Available at: https://web.wpi.edu/Pubs/E-project/Available/E-project-012317-195256/unrestricted/Dynamic_Tuning_MQP.pdf (Accessed: 09 June 2023).