For a first experiment exploring the application of AI in a typographic context, I decided to look at which tools and software currently exist. The article “Artificial intelligence and real type design”, published by type.today, has already highlighted several tools along with their possible uses and limitations:

- Midjourney: Midjourney is a generative text-to-image tool. You cannot control the data the model learns from; instead, Midjourney bases its output on the “entire amount of knowledge it obtained during its lifetime” (Shaydullina, 2023). This makes the output difficult to control, especially when aiming for very specific shapes such as letters. The author suggests, however, that one can get a somewhat functional output by using the Blend command to create an arithmetic mean of two different typefaces (Shaydullina, 2023).

- Adobe Photoshop: The author writes that you can use Photoshop’s built-in AI tool to generate letters that are similar to an uploaded picture, but judges it rather harshly: “Photoshop rarely succeeds in it, however, it usually comes up with recognizable capital letters” (Shaydullina, 2023).
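To make the idea of an “arithmetic mean of two different typefaces” more tangible, here is a minimal sketch in plain Python. It is only a pixel-space analogy under my own assumptions: Midjourney does not document how Blend works internally, and the function name `blend_mean` and the tiny 3×3 bitmaps are hypothetical illustrations, not anything taken from the article.

```python
from typing import List

# A grayscale bitmap as rows of pixel values: 0.0 = white, 1.0 = black.
Bitmap = List[List[float]]


def blend_mean(a: Bitmap, b: Bitmap) -> Bitmap:
    """Return the pixel-wise arithmetic mean of two equally sized bitmaps."""
    same_height = len(a) == len(b)
    same_widths = all(len(ra) == len(rb) for ra, rb in zip(a, b))
    if not (same_height and same_widths):
        raise ValueError("bitmaps must have identical dimensions")
    return [[(pa + pb) / 2 for pa, pb in zip(ra, rb)] for ra, rb in zip(a, b)]


# Hypothetical 3x3 renderings of a vertical stem (assumed data):
# a thin stem one pixel wide and a bold stem filling the whole cell.
thin = [[0.0, 1.0, 0.0], [0.0, 1.0, 0.0], [0.0, 1.0, 0.0]]
bold = [[1.0, 1.0, 1.0], [1.0, 1.0, 1.0], [1.0, 1.0, 1.0]]

blended = blend_mean(thin, bold)
for row in blended:
    print(row)
```

In this toy case the centre column stays fully black while the outer columns turn mid-grey. That hints at why a naive average tends to give blurry edges rather than a clean intermediate weight, and why the blended output is only “somewhat functional”.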

In addition, I found several other applications that can be useful in the typographic process:

- Monotype AI pairing engine: This tool by Monotype pairs fonts and gives advice on hierarchy, font size, etc. (Jalali, n.d.).

- iKern: This software, developed by Igino Marini, automates kerning, that is, determining the relative positioning of glyphs (Marini, n.d.).

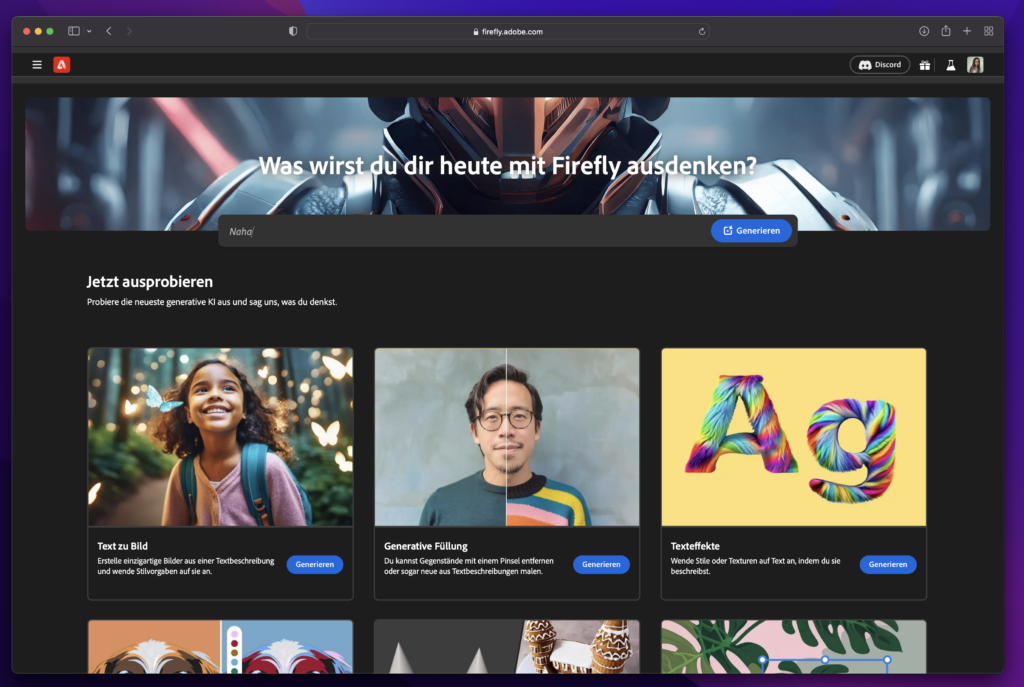

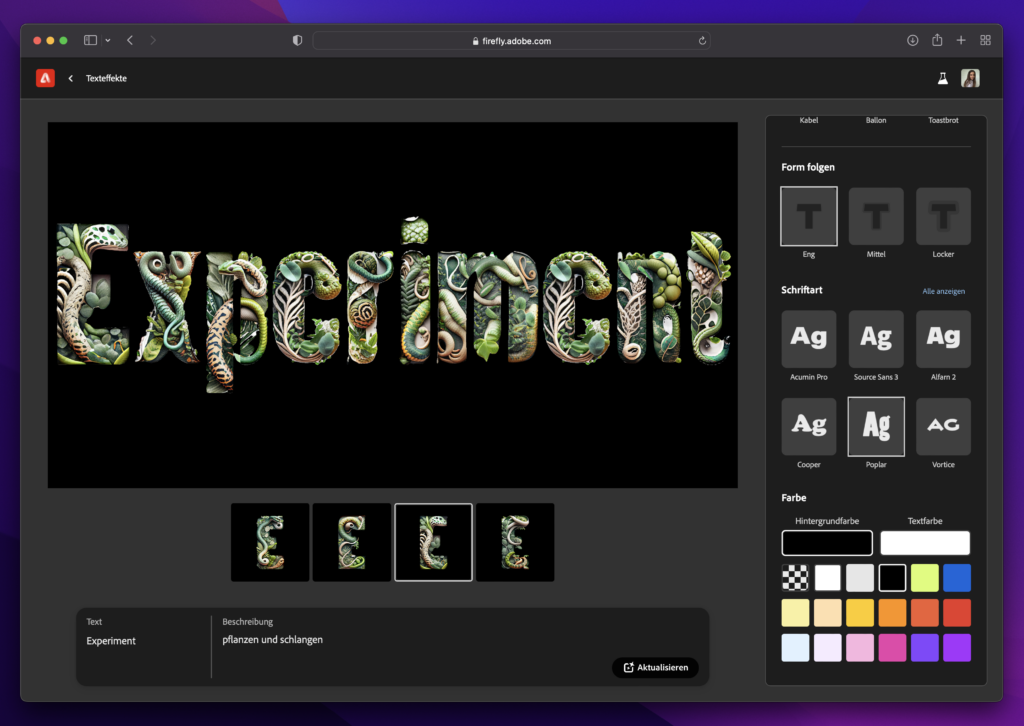

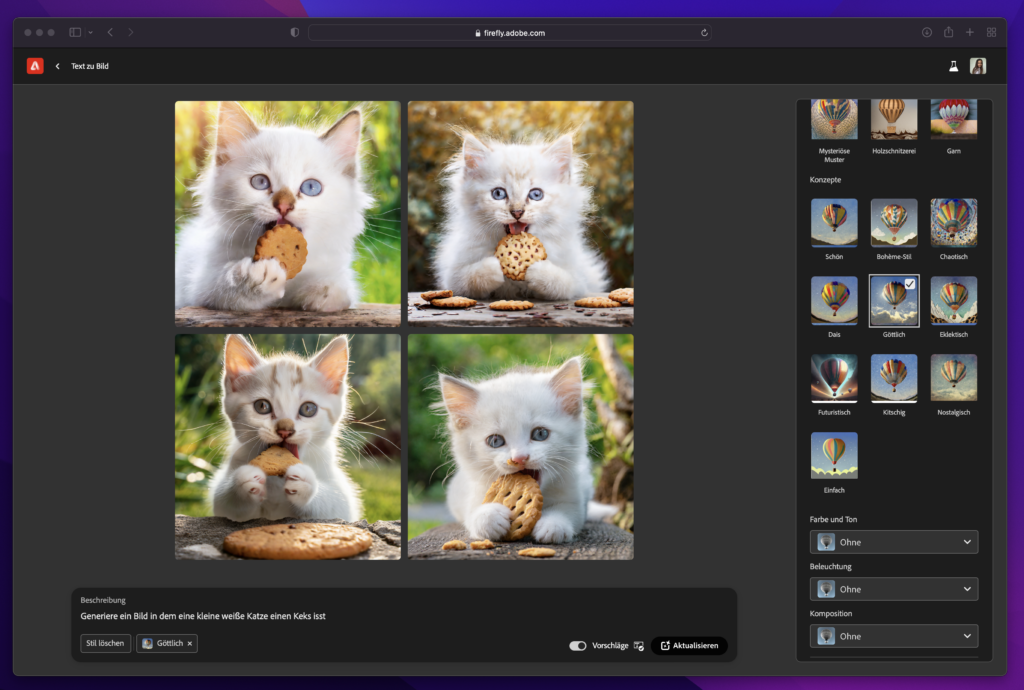

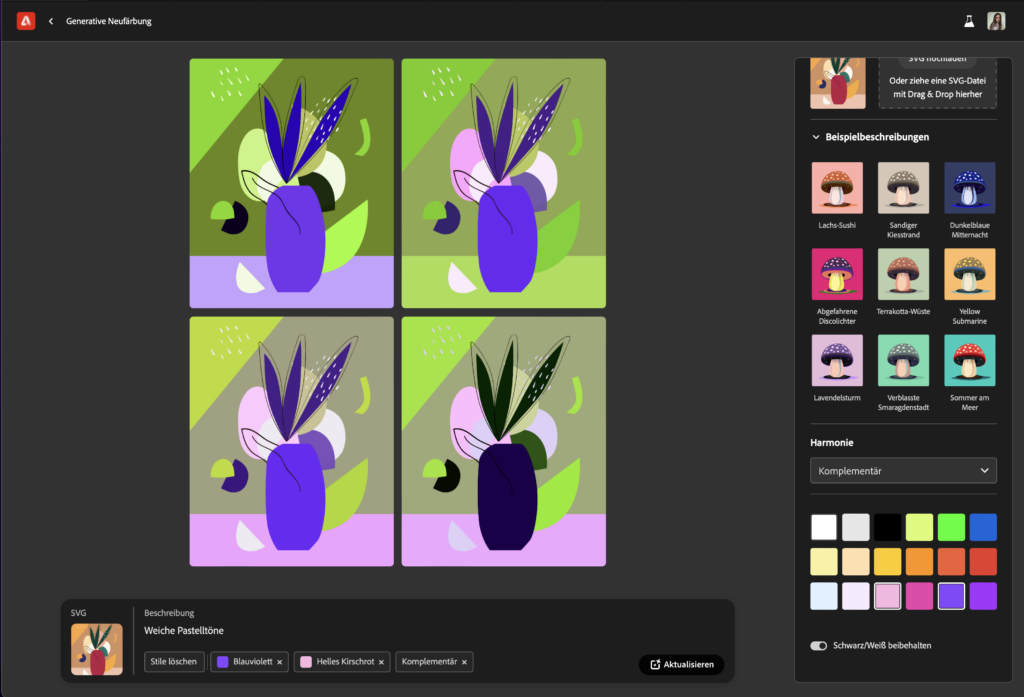

- Adobe Firefly: Adobe’s answer to generative AI currently allows you, among other things, to generate images from text or apply textures to words (Adobe Creative Cloud, n.d.). However, neither feature seems to add more options for creating typefaces than the aforementioned tools.

Unfortunately, the main problem with the software solutions available on the market to date seems to be the lack of control over the input used to train the AI, which matters if we want to create usable letters. Some designers have, however, already tried to train their own models, many of them using StyleGAN, a Style-Based Generator Architecture for Generative Adversarial Networks developed by NVIDIA (NVlabs, n.d.).

To get a better overview of the developments in the AI sphere and to broaden my understanding of what is currently possible, I decided to try out different tools. I began with what is arguably the most popular text-to-image AI available: Midjourney.

First experiment: Midjourney

To start off, I gave the Midjourney Bot the simple brief “the letter ‘A’ in black on a white background”, which led to this outcome:

Figure 1: An AI-generated image of the letter ‘A’

Note. Image generated using Midjourney from the prompt “the letter ‘A’ in black on a white background”.

Unfortunately, Midjourney returned images with textures, color splashes or a 3D effect, so I adjusted my prompt to the following: “the letter ‘A’ in black on a white background as a flat shape –no texture or 3D effect”, which led to clearer shapes.

Figure 2: An AI-generated image of the letter ‘A’

Note. Image generated using Midjourney from the prompt “the letter ‘A’ in black on a white background as a flat shape –no texture or 3D effect”.

As a next step, I tried to give the AI more detailed input on the type of letter it should produce, adding “in a Serif style” to the prompt.

Figure 3: An AI-generated image of the letter ‘A’

Note. Image generated using Midjourney from the prompt “the letter ‘A’ in black on a white background as a flat shape in a Serif style –no texture or 3D effect”.
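The step-by-step refinement of the prompt can be sketched as a small helper that assembles the text from its parts. This is only an illustration under assumptions: the function `build_prompt` and its parameters are hypothetical, it produces a string and does not talk to Midjourney, and I assume that the “–no” in the prompts above stands for Midjourney’s `--no` parameter, which takes a comma-separated list of things to leave out.

```python
from typing import Optional, Sequence


def build_prompt(
    letter: str,
    flat: bool = False,
    style: Optional[str] = None,
    exclude: Sequence[str] = (),
) -> str:
    """Assemble a prompt string from the building blocks used in this experiment."""
    parts = [f"the letter '{letter}' in black on a white background"]
    if flat:
        parts.append("as a flat shape")
    if style:
        parts.append(f"in a {style} style")
    prompt = " ".join(parts)
    if exclude:
        # Assumption: unwanted elements go into a comma-separated --no list.
        prompt += " --no " + ", ".join(exclude)
    return prompt


# The three stages of the experiment, from plain brief to Serif variant.
unwanted = ["texture", "3D effect"]
print(build_prompt("A"))
print(build_prompt("A", flat=True, exclude=unwanted))
print(build_prompt("A", flat=True, style="Serif", exclude=unwanted))
```

Keeping the parts separate makes it easy to change one variable at a time, for example only the style, and compare the results.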

Besides prompts, Midjourney offers several commands for working with the images it creates. I tried creating variations of a letter I liked (Fig. 4) and varying individual regions (Fig. 5), with the latter coming closest to typographic variations.

Figure 4: AI-generated variations of the letter ‘A’

Note. Image generated using Midjourney from the prompt “the letter ‘A’ in black on a white background as a flat shape in a Serif style –no texture or 3D effect – Variations (Strong)”.

Figure 5: AI-generated variations of the letter ‘A’

Note. Image generated using Midjourney from the prompt “the letter ‘A’ in black on a white background as a flat shape in a Serif style –no texture or 3D effect – Variations (Region)”.

A little less successful was my attempt at creating a letter ‘B’ to match the ‘A’ that Midjourney had created. The output was just a generic ‘B’ with little resemblance to the original letter.

Figure 6: An AI-generated image of the letter ‘B’

Note. Image generated using Midjourney from the prompt “the letter ‘B’ in black on a white background as a flat shape in a Serif style –no texture or 3D effect – Remix”.

Similarly, when I asked the AI to create multiple letters within one picture, the software was not able to carry out my request in the way I had imagined.

Figure 7: An AI-generated image of multiple letters

Note. Image generated using Midjourney from the prompt “the letters ‘A’, ‘B’ and ‘C’ in black on a white background as a flat shape in a Serif style –no texture or 3D effect”.

As a last trial, I uploaded a picture of three sample letters in a decorative style to Midjourney as a reference image (Fig. 8) and prompted the software again to create the letter ‘C’. Sadly, the first attempt only led to a more “creative” 3D output (Fig. 9). Adding the more detailed styling instructions to the prompt produced somewhat usable shapes, but not letters of the Latin alphabet (Fig. 10).

Figure 8: Reference image of three letters in a decorative style

Figure 9: An AI-generated image of the letter ‘C’

Note. Image generated using Midjourney from the reference image and the prompt “letter C”.

Figure 10: An AI-generated image of the letter ‘C’

Note. Image generated using Midjourney from the reference image and the prompt “letter ‘C’ in black on a white background as a flat shape in the same style –no texture or 3D effect”.

Learnings from this experiment:

- As of today, and with my current knowledge of how to use the tool, I can generate letterforms with Midjourney.

- However, only single letters can be created, and it is difficult to create a second, matching one.

- Only minor influence on the style of the letters is possible; adding a reference image does not work properly.

As fun as this first experiment was, it seems to me that Midjourney is not really of use at the moment for creating typefaces or for typesetting, but I will explore the possibilities more deeply in the future.

References

- Adobe Creative Cloud. (n.d.). Adobe Firefly. Retrieved November 15, 2023, from https://www.adobe.com/sensei/generative-ai/firefly.html

- Jalali, A. (n.d.). Putting AI to work: The magic of typeface pairing. Monotype. Retrieved November 15, 2023, from https://www.monotype.com/resources/expertise/putting-ai-work-magic-typeface-pairing

- Marini, I. (n.d.). iKern: Type metrics and engineering. iKern. Retrieved November 15, 2023, from https://www.ikern.space/

- NVlabs. (n.d.). GitHub – NVlabs/stylegan: StyleGAN – Official TensorFlow Implementation. GitHub. Retrieved November 15, 2023, from https://github.com/NVlabs/stylegan

- Shaydullina, A. (2023, June 7). Artificial intelligence and real type design. type.today. Retrieved November 15, 2023, from https://type.today/en/journal/fontsai